This document details basic procedures for system administrators managing, maintaining, configuring and deploying Rhino 2.6 using the command-line console. To manage using a web interface, see the Rhino Element Manager documentation.

Topics

- Administrative tasks for day-to-day management of the Rhino SLEE, its components and entities deployed in it, including: operational state, deployable units, services, resource adaptor entities, per-node activation state, profile tables and profiles, alarms, usage, user transactions, and component activation priorities.
- Procedures for configuring Rhino upon installation, and as needed (for example, to tune performance), including: logging, staging, object pools, licenses, rate limiting, security and external databases.
- Finding Housekeeping MBeans, and finding, inspecting and removing one or all activities or SBB entities.
- Backing up and restoring the database, and exporting and importing SLEE deployment state.
- Managing the SNMP subsystem in Rhino, including: configuring the agent, managing MIB files, and assigning OID mappings.
- Tools included with Rhino for system administrators to manage Rhino.

Other documentation for the Rhino TAS can be found on the Rhino TAS product page.

SLEE Management

This section covers general administrative tasks for day-to-day management of the Rhino SLEE, its components and entities deployed in it.

JMX MBeans

Rhino SLEE uses Java Management Extensions (JMX) MBeans for management functionality. This includes both the functions defined in the JAIN SLEE 1.1 specification and Rhino extensions that provide functionality beyond the specification.

Rhino’s command-line console is a front end for these MBeans, providing access to their management functions.

Management may also be performed via the Rhino Element Manager web interface.

See also Management Tools, and the Rhino Management Extension APIs.

Namespaces

As well as an overview of Rhino namespaces, this section includes instructions for performing the following Rhino SLEE procedures, with explanations, examples, and links to related javadocs:

| Procedure | rhino-console command | MBean → Operation |
|---|---|---|
| Creating a namespace | createnamespace | Namespace Management → createNamespace |
| Removing a namespace | removenamespace | Namespace Management → removeNamespace |
| Listing namespaces | listnamespaces | Namespace Management → getNamespaces |
| Setting the active namespace | -n <namespace> (command-line option); setactivenamespace (interactive command) | Namespace Management → setActiveNamespace |
| Getting the active namespace | (shown in the command prompt) | Namespace Management → getActiveNamespace |

About Namespaces

A namespace is an independent deployment environment that is isolated from other namespaces.

A namespace has:

- its own SLEE operational state
- its own set of deployable units
- its own set of instantiated profile tables, profiles, and resource adaptor entities
- its own component configuration state
- its own set of activation states for services and resource adaptor entities.

All of these things can be managed within an individual namespace without affecting the state of any other namespace.

A namespace can be likened to a SLEE in itself, where Rhino with multiple namespaces is a container of SLEEs.

A Rhino cluster always has a default namespace that cannot be deleted. Any number of user-defined namespaces may also be created, managed, and subsequently deleted when no longer needed.

Management clients by default interact with the default namespace unless they explicitly request to interact with a different namespace.

Creating a Namespace

To create a new namespace, use the following rhino-console command or related MBean operation.

Console command: createnamespace

Command

createnamespace <name>

Description: Create a new deployment namespace

Example

$ ./rhino-console createnamespace testnamespace
Namespace testnamespace created

MBean operation: createNamespace

MBean: Namespace Management

Rhino extension

public void createNamespace(String name)
    throws NullPointerException, InvalidArgumentException,
           NamespaceAlreadyExistsException, ManagementException;
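Scripts that do not use rhino-console can call the same operation through a standard JMX connection. The sketch below is a minimal illustration under stated assumptions. It assumes you already hold an MBeanServerConnection to Rhino and know the Namespace Management MBean's ObjectName. The name used here (example:type=NamespaceManagement) and the FakeNamespaceManagement stand-in are hypothetical. They exist only so that the call pattern can be tried without a cluster. Check the Rhino Management Extension APIs for the real ObjectName and for any typed client proxies.

```java
import java.util.Set;
import java.util.TreeSet;
import javax.management.Attribute;
import javax.management.AttributeList;
import javax.management.AttributeNotFoundException;
import javax.management.DynamicMBean;
import javax.management.MBeanException;
import javax.management.MBeanInfo;
import javax.management.MBeanServerConnection;
import javax.management.ObjectName;
import javax.management.ReflectionException;

/** Client-side helper: calls createNamespace(String) through any JMX connection. */
final class NamespaceClient {
    // HYPOTHETICAL ObjectName for local experiments only; not Rhino's real MBean name.
    static final String EXAMPLE_OBJECT_NAME = "example:type=NamespaceManagement";

    static void createNamespace(MBeanServerConnection conn, ObjectName mbean, String name)
            throws Exception {
        // The signature array tells JMX which overload to call: one java.lang.String argument.
        conn.invoke(mbean, "createNamespace",
                new Object[] {name}, new String[] {String.class.getName()});
    }
}

/** Local stand-in (NOT Rhino) that records namespace names, for trying the call offline. */
final class FakeNamespaceManagement implements DynamicMBean {
    final Set<String> namespaces = new TreeSet<>();

    @Override
    public Object invoke(String op, Object[] params, String[] sig)
            throws MBeanException, ReflectionException {
        if ("createNamespace".equals(op) && params != null && params.length == 1) {
            String name = (String) params[0];
            if (!namespaces.add(name)) {
                // Loosely mirrors NamespaceAlreadyExistsException, wrapped as JMX requires.
                throw new MBeanException(new IllegalStateException("exists: " + name));
            }
            return null;
        }
        throw new ReflectionException(new NoSuchMethodException(op));
    }

    @Override
    public Object getAttribute(String name) throws AttributeNotFoundException {
        throw new AttributeNotFoundException(name);
    }

    @Override
    public void setAttribute(Attribute attr) throws AttributeNotFoundException {
        throw new AttributeNotFoundException(attr.getName());
    }

    @Override
    public AttributeList getAttributes(String[] names) { return new AttributeList(); }

    @Override
    public AttributeList setAttributes(AttributeList attrs) { return new AttributeList(); }

    @Override
    public MBeanInfo getMBeanInfo() {
        return new MBeanInfo(getClass().getName(), "Local stand-in", null, null, null, null);
    }
}
```

Against a real cluster, pass the MBeanServerConnection obtained from your JMX connector in place of a local MBeanServer. The stand-in is then unnecessary.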

Removing a Namespace

To remove an existing user-defined namespace, use the following rhino-console command or related MBean operation.

The default namespace cannot be removed.

All deployable units (other than the deployable unit containing the standard JAIN SLEE-defined types) must be uninstalled, and all profile tables removed, from a namespace before that namespace can be removed.

Console command: removenamespace

Command

removenamespace <name>

Description: Remove an existing deployment namespace

Example

$ ./rhino-console removenamespace testnamespace
Namespace testnamespace removed

MBean operation: removeNamespace

MBean: Namespace Management

Rhino extension

public void removeNamespace(String name)
    throws NullPointerException, UnrecognizedNamespaceException,
           InvalidStateException, ManagementException;
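The removal conditions above can be checked before calling removenamespace. The following is a hypothetical pre-flight check that only models the documented rules. The caller supplies the installed deployable unit names, the name of the DU holding the standard JAIN SLEE types, and the profile table names, however it obtains them. NamespaceRemovalCheck is not part of Rhino, and the DU names in the usage are placeholders.

```java
import java.util.*;

/** Hypothetical pre-flight check mirroring the documented removenamespace rules. */
final class NamespaceRemovalCheck {
    /**
     * Returns reasons the namespace cannot be removed yet; an empty list means none were found.
     *
     * @param isDefault       whether the namespace is the default namespace
     * @param installedDUs    deployable units installed in the namespace
     * @param standardTypesDU the DU holding the standard JAIN SLEE-defined types (may remain)
     * @param profileTables   profile tables present in the namespace
     */
    static List<String> problems(boolean isDefault, Collection<String> installedDUs,
                                 String standardTypesDU, Collection<String> profileTables) {
        List<String> problems = new ArrayList<>();
        if (isDefault) {
            problems.add("the default namespace cannot be removed");
            return problems;
        }
        for (String du : installedDUs) {
            if (!du.equals(standardTypesDU)) {
                problems.add("deployable unit still installed: " + du);
            }
        }
        for (String table : profileTables) {
            problems.add("profile table still present: " + table);
        }
        return problems;
    }
}
```

Returning every problem at once, rather than failing on the first, lets an operator clean up a namespace in one pass.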

Listing Namespaces

To list all user-defined namespaces in a SLEE, use the following rhino-console command or related MBean operation.

Console command: listnamespaces

Command

listnamespaces

Description: List all deployment namespaces

Example

$ ./rhino-console listnamespaces
testnamespace

MBean operation: getNamespaces

MBean: Namespace Management

Rhino extension

public String[] getNamespaces() throws ManagementException;

This operation returns the names of the user-defined namespaces that have been created.

Setting the Active Namespace

Each individual authenticated client connection to Rhino is associated with a namespace.

This setting, known as the active namespace, controls which namespace is affected by management commands such as those that install deployable units or change operational states.

To change the active namespace for a client connection, use the following rhino-console command or related MBean operation.

Console command: setactivenamespace

Command and command-line option

Interactive mode: in interactive mode, use the setactivenamespace command.

setactivenamespace <-default|name>

Description: Set the active namespace

Non-interactive mode: in non-interactive mode, use the -n <namespace> command-line option.

Examples

Interactive mode:

$ ./rhino-console
Interactive Rhino Management Shell
Rhino management console, enter 'help' for a list of commands
[Rhino@localhost (#0)] setactivenamespace testnamespace
The active namespace is now testnamespace
[Rhino@localhost [testnamespace] (#1)] setactivenamespace -default
The active namespace is now the default namespace
[Rhino@localhost (#2)]

Non-interactive mode:

$ ./rhino-console -n testnamespace start
The active namespace is now testnamespace
Starting SLEE on node(s) [101]
SLEE transitioned to the Starting state on node 101

MBean operation: setActiveNamespace

MBean: Namespace Management

Rhino extension

public void setActiveNamespace(String name)
    throws NoAuthenticatedSubjectException, UnrecognizedNamespaceException,
           ManagementException;

This operation sets the active namespace for the client connection. A

Getting the Active Namespace

To get the active namespace for a client connection, use the following rhino-console information and related MBean operation.

Console:

Command prompt information

The currently active namespace is reported in the command prompt within square brackets. If no namespace is reported, the default namespace is active.

Example

$ ./rhino-console
Interactive Rhino Management Shell
Rhino management console, enter 'help' for a list of commands
[Rhino@localhost (#0)] setactivenamespace testnamespace
The active namespace is now testnamespace
[Rhino@localhost [testnamespace] (#1)] setactivenamespace -default
The active namespace is now the default namespace
[Rhino@localhost (#2)]
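Scripts that drive the interactive console, for example through an expect-style tool, can recover the active namespace from the prompt. This is a minimal sketch. It assumes the prompt always has the shape shown in the example above, either [host (#n)] or [host [namespace] (#n)]. Other prompt formats would need a different pattern. PromptNamespace is a hypothetical helper.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper: extracts the active namespace from a rhino-console prompt. */
final class PromptNamespace {
    // Matches "[Rhino@localhost (#0)]" and "[Rhino@localhost [testnamespace] (#1)]".
    private static final Pattern PROMPT =
            Pattern.compile("^\\[[^\\s\\[]+(?: \\[([^\\]]+)\\])? \\(#\\d+\\)\\]$");

    /** Returns the namespace name, or Optional.empty() when the default namespace is active. */
    static Optional<String> activeNamespace(String prompt) {
        Matcher m = PROMPT.matcher(prompt.trim());
        if (!m.matches()) {
            throw new IllegalArgumentException("Unrecognised prompt: " + prompt);
        }
        return Optional.ofNullable(m.group(1)); // group 1 is null when no namespace is shown
    }
}
```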

MBean operation: getActiveNamespace

MBean: Namespace Management

Rhino extension

public String getActiveNamespace() throws NoAuthenticatedSubjectException, ManagementException;

This operation returns the name of the namespace currently active for the client connection.

Operational State

As well as an overview of SLEE operational states, this section includes instructions for performing the following Rhino SLEE procedures, with explanations, examples and links to related javadocs:

| Procedure | rhino-console command | MBean → Operation |
|---|---|---|
| Starting the SLEE | start | SLEE Management → start |
| Stopping the SLEE | stop | SLEE Management → stop |
| Retrieving the basic operational state of nodes | state | SLEE Management → getState |
| Retrieving detailed information for every node in the cluster | getclusterstate | Rhino Housekeeping → getClusterState |
| Gracefully shutting nodes down | shutdown | SLEE Management → shutdown |
| Forcefully terminating nodes | kill | SLEE Management → kill |
| Gracefully rebooting nodes | reboot | SLEE Management → reboot |
| Enabling the symmetric activation state mode | enablesymmetricactivationstatemode | Runtime Config Management → enableSymmetricActivationStateMode |
| Disabling the symmetric activation state mode | disablesymmetricactivationstatemode | Runtime Config Management → disableSymmetricActivationStateMode |
| Getting the current activation state mode | getsymmetricactivationstatemode | Runtime Config Management → isSymmetricActivationStateModeEnabled |
| Listing nodes with per-node activation state | getnodeswithpernodeactivationstate | Node Housekeeping → getNodesWithPerNodeActivationState |
| Copying per-node activation state to another node | copypernodeactivationstate | Node Housekeeping → copyPerNodeActivationState |
| Removing per-node activation state | removepernodeactivationstate | Node Housekeeping → removePerNodeActivationState |

About SLEE Operational States

The SLEE specification defines the operational lifecycle of a SLEE, summarised below.

SLEE lifecycle states

The SLEE lifecycle states are:

| State | Definition |
|---|---|
| STOPPED | The SLEE has been configured and initialised, and is ready to be started. Active resource adaptor entities have been loaded and initialised, and SBBs corresponding to active services have been loaded and are ready to be instantiated. The entire event-driven subsystem, however, is idle: resource adaptor entities and the SLEE are not actively producing events, the event router is not processing work, and the SLEE is not creating SBB entities. |
| STARTING | Resource adaptor entities in the SLEE that have been recorded in the management database as being in the ACTIVE state are started. The SLEE still does not create SBB entities. The node automatically transitions from this state to the RUNNING state when all startup tasks are complete, or to the STOPPING state if a startup task fails. |
| RUNNING | Activated resource adaptor entities in the SLEE can fire events, and the SLEE creates SBB entities and delivers events to them as needed. |
| STOPPING | Identical to the RUNNING state, except resource adaptor entities do not create (and the SLEE does not accept) new activity objects. Existing activity objects can end (according to the resource adaptor specification). The node automatically transitions out of this state, returning to the STOPPED state, when all SLEE activities have ended. The node can transition to this state directly from the STARTING state, effective immediately, if it has no activity objects. |
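The transitions in the table can be summarised as a small state machine. The enum below is an illustrative model only. The SLEE API's own type is javax.slee.management.SleeState. The model encodes only the transitions described above: start, completion or failure of startup, stop, and the end of all activities. SleeLifecycle is a hypothetical name.

```java
import java.util.EnumSet;
import java.util.Set;

/** Illustrative model of the SLEE lifecycle transitions described above (not Rhino code). */
enum SleeLifecycle {
    STOPPED, STARTING, RUNNING, STOPPING;

    /** States reachable directly from this state. */
    Set<SleeLifecycle> next() {
        switch (this) {
            case STOPPED:  return EnumSet.of(STARTING);          // start
            case STARTING: return EnumSet.of(RUNNING, STOPPING); // startup done / startup task failed
            case RUNNING:  return EnumSet.of(STOPPING);          // stop
            case STOPPING: return EnumSet.of(STOPPED);           // all activities have ended
            default:       throw new AssertionError(this);
        }
    }

    boolean canMoveTo(SleeLifecycle target) {
        return next().contains(target);
    }
}
```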

Independent SLEE states

Each namespace on each event-router node in a Rhino cluster maintains its own SLEE-lifecycle state machine, independent from other namespaces on the same or other nodes in the cluster. For example:

- the default namespace on one node in a cluster might be in the RUNNING state
- while a user-defined namespace on the same node is in the STOPPED state
- while the default namespace on another node is in the STOPPING state
- and the user-defined namespace on that node is in the RUNNING state.

The operational state of each namespace on each cluster node persists to the disk-based database.

Bootup SLEE state

After completing bootup and initialisation, a namespace on a node will enter the STOPPED state if:

- the database has no persistent operational state information for that namespace on that node;
- the namespace’s persistent operational state is STOPPED on that node; or
- the node was started with the -x option (see Start Rhino in the Rhino Getting Started Guide).

Otherwise, the namespace will return to the same operational state that it was last in, as recorded in persistent storage.
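The bootup rules reduce to a single decision. This sketch assumes the caller already knows the persisted state, if any, and whether -x was used. BootState is a hypothetical helper, not Rhino code, and it follows the rules above literally.

```java
import java.util.Optional;

/** Hypothetical helper applying the bootup rules above; not Rhino code. */
final class BootState {
    enum State { STOPPED, STARTING, RUNNING, STOPPING }

    /**
     * @param persisted    last operational state recorded for this namespace on this node, if any
     * @param startedWithX whether the node was started with the -x option
     * @return the state the namespace enters once bootup and initialisation complete
     */
    static State afterBoot(Optional<State> persisted, boolean startedWithX) {
        if (startedWithX || !persisted.isPresent() || persisted.get() == State.STOPPED) {
            return State.STOPPED;
        }
        return persisted.get(); // otherwise return to the last recorded state
    }
}
```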

Changing a namespace’s operational state

You can change the operational state of any namespace on any node at any time, as long as at least one node in the cluster is available to perform the management operation (regardless of whether the node whose operational state is being changed is a current cluster member). For example, you might set the operational state of the default namespace on node 103 to RUNNING before node 103 is started; then, when node 103 is started, the default namespace will enter the RUNNING state after the node completes initialising.

Changing a quorum node’s operational state

You can also change the operational state of a node that is currently a member of the cluster as a quorum node, but quorum nodes make no use of operational state information stored in the database and will not respond to operational state changes. (A node only uses operational state information if it starts as a regular event-router node.)

Starting the SLEE

To start a SLEE on one or more nodes, use the following rhino-console command or related MBean operation.

Console command: start

Command

start [-nodes node1,node2,...] [-ifneeded]

Description: Start the SLEE (on the specified nodes)

Example

To start nodes 101 and 102:

$ ./rhino-console start -nodes 101,102
Starting SLEE on node(s) [101,102]
SLEE transitioned to the Starting state on node 101
SLEE transitioned to the Starting state on node 102

MBean operation: start

MBean: SLEE Management

SLEE-defined: Start all nodes

public void start() throws InvalidStateException, ManagementException;

Rhino’s implementation of the SLEE-defined

Rhino extension: Start specific nodes

public void start(int[] nodeIDs)
    throws NullPointerException, InvalidArgumentException,
           InvalidStateException, ManagementException;

Rhino provides an extension that adds an argument which lets you control which nodes to start (by specifying node IDs). For this to work, the specified nodes must be in the STOPPED state.
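When you call the node-specific overload through a generic MBeanServerConnection, the JMX signature array must name the parameter type. That is how the server selects start(int[]) rather than the no-argument start(). For int[], the type name is "[I". The sketch below also parses a console-style node list. StartNodes is a hypothetical helper, and the caller supplies the SLEE Management MBean's ObjectName.

```java
import java.util.*;
import javax.management.MBeanServerConnection;
import javax.management.ObjectName;

/** Hypothetical helper for the node-specific SLEE Management overloads. */
final class StartNodes {
    /** JMX signature selecting an (int[]) overload; int[].class.getName() is "[I". */
    static final String[] INT_ARRAY_SIGNATURE = { int[].class.getName() };

    /** Parses a console-style list such as "101,102" into node IDs. */
    static int[] parseNodeIds(String csv) {
        return Arrays.stream(csv.split(","))
                .map(String::trim)
                .filter(s -> !s.isEmpty())
                .mapToInt(Integer::parseInt)
                .toArray();
    }

    /** Invokes start(int[]); the caller supplies the SLEE Management MBean's ObjectName. */
    static void start(MBeanServerConnection conn, ObjectName sleeManagement, int[] nodeIds)
            throws Exception {
        conn.invoke(sleeManagement, "start", new Object[] { nodeIds }, INT_ARRAY_SIGNATURE);
    }
}
```

The same signature pattern applies to the other int[] overloads documented here, such as stop(int[]), getState(int[]) and shutdown(int[]).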

Stopping the SLEE

To stop SLEE event-routing functions on one or more nodes, use the following rhino-console command or related MBean operation.

Console command: stop

Command

stop [-nodes node1,node2,...] [-reassignto node3,node4,...] [-ifneeded]

Description: Stop the SLEE (on the specified nodes (reassigning replicated activities to the specified nodes))

Examples

To stop nodes 101 and 102:

$ ./rhino-console stop -nodes 101,102
Stopping SLEE on node(s) [101,102]
SLEE transitioned to the Stopping state on node 101
SLEE transitioned to the Stopping state on node 102

To stop only node 101 and reassign replicated activities to node 102:

$ ./rhino-console stop -nodes 101 -reassignto 102
Stopping SLEE on node(s) [101]
SLEE transitioned to the Stopping state on node 101
Replicated activities reassigned to node(s) [102]

To stop node 101 and distribute the replicated activities of each replicating resource adaptor entity to all other eligible nodes (those on which the resource adaptor entity is in the ACTIVE state and the SLEE is in the RUNNING state), specify an empty (zero-length) argument for the -reassignto option:

$ ./rhino-console stop -nodes 101 -reassignto ""
Stopping SLEE on node(s) [101]
SLEE transitioned to the Stopping state on node 101
Replicated activities reassigned to node(s) [102,103]

MBean operation: stop

MBean: SLEE Management

SLEE-defined: Stop all nodes

public void stop() throws InvalidStateException, ManagementException;

Rhino’s implementation of the SLEE-defined

Rhino extension: Stop specific nodes

public void stop(int[] nodeIDs)
    throws NullPointerException, InvalidArgumentException,
           InvalidStateException, ManagementException;

Rhino provides an extension that adds an argument which lets you control which nodes to stop (by specifying node IDs). For this to work, the specified nodes must begin in the RUNNING state.

Rhino extension: Reassign activities to other nodes

public void stop(int[] stopNodeIDs, int[] reassignActivitiesToNodeIDs)
    throws NullPointerException, InvalidArgumentException,
           InvalidStateException, ManagementException;

Rhino also provides an extension that adds another argument, which lets you reassign ownership of replicated activities (from replicating resource adaptor entities) away from the stopping nodes. The activities of each resource adaptor entity are distributed equally among the other event-router nodes where that resource adaptor entity is eligible to adopt them. With fewer activities, the resource adaptor entities on the stopping nodes can return to the INACTIVE state more quickly, which is required for the SLEE to transition from the STOPPING state to the STOPPED state. This only works for resource adaptor entities that replicate activity state (see the description of the "Rhino-defined configuration property" on the MBean tab on Creating a Resource Adaptor Entity). See also Reassigning a Resource Adaptor Entity’s Activities to Other Nodes, in particular the Requirements tab.
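To make the eligibility rule concrete, the sketch below works with one replicating resource adaptor entity. It picks the eligible nodes: the entity is ACTIVE there, the SLEE is RUNNING there, and the node is not itself stopping. It then spreads the activities across those nodes round-robin. This illustrates "distributing equally" under those assumptions. It is not Rhino's actual assignment algorithm. ActivityReassignment and its inputs are hypothetical.

```java
import java.util.*;

/** Illustrative model only; not Rhino's reassignment algorithm. */
final class ActivityReassignment {
    /**
     * Nodes eligible to adopt one RA entity's activities: not being stopped, the RA entity
     * is ACTIVE there, and the SLEE is RUNNING there. Returned in ascending node-ID order.
     */
    static List<Integer> eligibleNodes(Map<Integer, Boolean> raEntityActive,
                                       Map<Integer, Boolean> sleeRunning,
                                       Set<Integer> stoppingNodes) {
        List<Integer> eligible = new ArrayList<>();
        for (Integer node : new TreeSet<>(raEntityActive.keySet())) {
            boolean active = Boolean.TRUE.equals(raEntityActive.get(node));
            boolean running = Boolean.TRUE.equals(sleeRunning.get(node));
            if (!stoppingNodes.contains(node) && active && running) {
                eligible.add(node);
            }
        }
        return eligible;
    }

    /** Deals activities out to eligible nodes in turn, so per-node counts differ by at most one. */
    static Map<Integer, List<String>> distribute(List<String> activities, List<Integer> eligible) {
        if (eligible.isEmpty()) {
            throw new IllegalStateException("no eligible node can adopt the activities");
        }
        Map<Integer, List<String>> assignment = new TreeMap<>();
        for (int i = 0; i < activities.size(); i++) {
            Integer node = eligible.get(i % eligible.size());
            assignment.computeIfAbsent(node, k -> new ArrayList<>()).add(activities.get(i));
        }
        return assignment;
    }
}
```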

Basic Operational State of a Node

To retrieve the basic operational state of a node, use the following rhino-console command or related MBean operation.

Console command: state

Command

state [-nodes node1,node2,...]

Description: Get the state of the SLEE (on the specified nodes)

Output

The

Examples

To display the state of only node 101:

$ ./rhino-console state -nodes 101
Node 101 is Stopped

To display the state of every event-router node:

$ ./rhino-console state
Node 101 is Stopped
Node 102 is Running

MBean operation: getState

MBean: SLEE Management

SLEE-defined: Return state of current node

public SleeState getState() throws ManagementException;

Rhino’s implementation of the SLEE-defined

Rhino extension: Return state of specific nodes

public SleeState[] getState(int[] nodeIDs)
    throws NullPointerException, InvalidArgumentException,
           ManagementException;

Rhino provides an extension that adds an argument which lets you control which nodes to examine (by specifying node IDs).

Detailed Information for Every Node in the Cluster

To retrieve detailed information for every node in the cluster (including quorum nodes), use the following rhino-console command or related MBean operation.

Console command: getclusterstate

Command

getclusterstate

Description: Display the current state of the Rhino Cluster

Output

For every node in the cluster, retrieves detailed information on the node ID, number of active alarms, host, node type, SLEE state, start time and up time.

Example

$ ./rhino-console getclusterstate
node-id   active-alarms   host               node-type      slee-state   start-time          up-time
--------  --------------  -----------------  -------------  -----------  ------------------  -----------------
101       0               host1.domain.com   event-router   Stopped      20080327 12:16:26   0days,2h,40m,3s
102       0               host2.domain.com   event-router   Running      20080327 12:16:30   0days,2h,39m,59s
103       0               host3.domain.com   quorum         n/a          20080327 14:36:25   0days,0h,20m,4s

MBean operation: getClusterState

MBean: Rhino Housekeeping

Rhino extension

public TabularData getClusterState() throws ManagementException;

(Refer to the

See also Basic operational state of a node.
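getClusterState() returns open-MBean TabularData, so a JMX client can read it without Rhino classes on its classpath. The sketch below assumes the row items are named like the console column headings (node-id, slee-state). Verify the actual CompositeType item names against the Rhino javadoc before relying on them. ClusterStateReader is a hypothetical helper.

```java
import java.util.*;
import javax.management.openmbean.*;

/** Reads node-id -> slee-state pairs from getClusterState() output (item names ASSUMED). */
final class ClusterStateReader {
    // ASSUMPTION: item names mirror the console column headings; verify against the javadoc.
    static final String NODE_ID = "node-id";
    static final String SLEE_STATE = "slee-state";

    /** Returns each node's SLEE state, sorted by node ID. */
    static SortedMap<Integer, String> sleeStates(TabularData table) {
        SortedMap<Integer, String> states = new TreeMap<>();
        for (Object row : table.values()) {
            CompositeData data = (CompositeData) row;
            // String.valueOf copes with the ID being either a number or a string.
            int nodeId = Integer.parseInt(String.valueOf(data.get(NODE_ID)));
            states.put(nodeId, String.valueOf(data.get(SLEE_STATE)));
        }
        return states;
    }
}
```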

Terminating Nodes

To terminate cluster nodes, you can shut them down gracefully or forcefully terminate them, as described below.

You can stop functions on nodes and nodes themselves, by:

See also Stop Rhino in the Getting Started Guide, which details using the

Shut Down Gracefully

To gracefully shut down one or more nodes, use the following rhino-console command or related MBean operation.

Console command: shutdown

Command

shutdown [-nodes node1,node2,...] [-timeout timeout] [-restart]

Description: Gracefully shutdown the SLEE (on the specified nodes). The optional timeout is specified in seconds. Optionally restart the node(s) after shutdown

Examples

To shut down the entire cluster:

$ ./rhino-console shutdown
Shutting down the SLEE
Shutdown successful

To shut down only node 102:

$ ./rhino-console shutdown -nodes 102
Shutting down node(s) [102]
Shutdown successful

MBean operation: shutdown

MBean: SLEE Management

SLEE-defined: Shut down all nodes

public void shutdown() throws InvalidStateException, ManagementException;

Rhino’s implementation of the SLEE-defined

Rhino extension: Shut down specific nodes

public void shutdown(int[] nodeIDs)
    throws NullPointerException, InvalidArgumentException,
           InvalidStateException, ManagementException;

Rhino provides an extension that adds an argument which lets you control which nodes to shut down (by specifying node IDs).

Event-router nodes can only be shut down when STOPPED. If any event-router node targeted by a shutdown operation is not in the STOPPED state, the operation fails and no nodes shut down. Quorum nodes can be shut down at any time.
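A shutdown that targets any event-router node which is not STOPPED fails as a whole, so a script may want to check the targets first. The sketch below is a hypothetical pre-flight check over node information the caller has already gathered, for example from getclusterstate output. ShutdownCheck, Node and NodeType are illustrative types, not Rhino classes.

```java
import java.util.*;

/** Hypothetical pre-flight check for shutdown; not part of Rhino. */
final class ShutdownCheck {
    enum NodeType { EVENT_ROUTER, QUORUM }

    /** Minimal node description assembled by the caller (illustrative type). */
    static final class Node {
        final int id;
        final NodeType type;
        final String sleeState; // e.g. "Stopped", "Running", or "n/a" for quorum nodes

        Node(int id, NodeType type, String sleeState) {
            this.id = id;
            this.type = type;
            this.sleeState = sleeState;
        }
    }

    /**
     * IDs of targeted event-router nodes that are not STOPPED. If this is non-empty, the whole
     * shutdown would fail and no node would shut down; quorum nodes never block it.
     */
    static List<Integer> blockers(Collection<Node> targets) {
        List<Integer> blockers = new ArrayList<>();
        for (Node n : targets) {
            if (n.type == NodeType.EVENT_ROUTER && !"stopped".equalsIgnoreCase(n.sleeState)) {
                blockers.add(n.id);
            }
        }
        return blockers;
    }
}
```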

Forcefully Terminate

To forcefully terminate a cluster node that is in any state where it can respond to management operations, use the following rhino-console command or related MBean operation.

Console command: kill

Command

kill -nodes node1,node2,...

Description: Forcefully terminate the specified nodes (forces them to become non-primary)

Example

To forcefully terminate nodes 102 and 103:

$ ./rhino-console kill -nodes 102,103
Terminating node(s) [102,103]
Termination successful

MBean operation: kill

MBean: SLEE Management

Rhino operation

public void kill(int[] nodeIDs)
    throws NullPointerException, InvalidArgumentException,
           ManagementException;

Rhino’s

Application state may be lost

Killing a node is not recommended: forcibly terminated nodes lose all non-replicated application state.

Rebooting Nodes

To gracefully reboot one or more nodes, use the following rhino-console command or related MBean operation.

Console command: reboot

Command

reboot [-nodes node1,node2,...] {-state state | -states state1,state2,...}

Description: Gracefully shutdown the SLEE (on the specified nodes), restarting into either the running or stopped state.
Use either the -state argument to set the state for all nodes or the -states argument to set it separately for each node rebooted.
If a list of nodes is not provided then -state must be used to set the state of all nodes.
Valid states are (r)unning and (s)topped

Examples

To reboot the entire cluster:

$ ./rhino-console reboot -states running,running,stopped
Restarting node(s) [101,102,103] (using Rhino's default shutdown timeout)
Restarting

To reboot only node 102:

$ ./rhino-console reboot -nodes 102 -states stopped
Restarting node(s) [102] (using Rhino's default shutdown timeout)
Restarting

MBean operation: reboot

MBean: SLEE Management

Rhino extension: Reboot all nodes

public void reboot(SleeState[] states)
    throws InvalidArgumentException, InvalidStateException, ManagementException;

Reboots every node in the cluster into the specified states.

Rhino extension: Reboot specific nodes

public void reboot(int[] nodeIDs, SleeState[] states)
    throws NullPointerException, InvalidArgumentException, InvalidStateException,
           ManagementException;

An extension to reboot that adds an argument which lets you control which nodes to reboot (by specifying node IDs).

Event-router nodes can restart into either the RUNNING state or the STOPPED state. Quorum nodes must still be given a state, but they do not use it.
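The -state and -states rules can be expressed as a small helper that pairs node IDs with requested states. This sketch makes two assumptions. First, that the parenthesised letters in the help text mean r and s are accepted abbreviations. Second, that matching is case-insensitive. RebootStates is hypothetical and does not call Rhino.

```java
import java.util.*;

/** Hypothetical helper pairing nodes with reboot target states; does not call Rhino. */
final class RebootStates {
    enum Target { RUNNING, STOPPED }

    /** Accepts "running"/"r" and "stopped"/"s" (ASSUMED case-insensitive). */
    static Target parse(String s) {
        switch (s.trim().toLowerCase(Locale.ROOT)) {
            case "r": case "running": return Target.RUNNING;
            case "s": case "stopped": return Target.STOPPED;
            default: throw new IllegalArgumentException("Valid states are running and stopped: " + s);
        }
    }

    /** One state for every node (like -state) or one state per node, in order (like -states). */
    static Map<Integer, Target> plan(int[] nodeIds, String[] states) {
        if (states.length != 1 && states.length != nodeIds.length) {
            throw new IllegalArgumentException("need 1 state or " + nodeIds.length + " states");
        }
        Map<Integer, Target> plan = new LinkedHashMap<>();
        for (int i = 0; i < nodeIds.length; i++) {
            plan.put(nodeIds[i], parse(states.length == 1 ? states[0] : states[i]));
        }
        return plan;
    }
}
```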

Activation State

As well as an overview of activation state modes, this section includes instructions for performing the following Rhino SLEE procedures, with explanations, examples, and links to related javadocs:

| Procedure | rhino-console command | MBean → Operation |
|---|---|---|
| Enabling the symmetric activation state mode | enablesymmetricactivationstatemode | Runtime Config Management → enableSymmetricActivationStateMode |
| Disabling the symmetric activation state mode | disablesymmetricactivationstatemode | Runtime Config Management → disableSymmetricActivationStateMode |
| Getting the current activation state mode | getsymmetricactivationstatemode | Runtime Config Management → isSymmetricActivationStateModeEnabled |
| Listing nodes with per-node activation state | getnodeswithpernodeactivationstate | Node Housekeeping → getNodesWithPerNodeActivationState |
| Copying per-node activation state to another node | copypernodeactivationstate | Node Housekeeping → copyPerNodeActivationState |
| Removing per-node activation state | removepernodeactivationstate | Node Housekeeping → removePerNodeActivationState |

About Activation State Modes

Rhino has two modes of operation for managing the activation state of services and resource adaptor entities: per-node and symmetric.

The activation state for the SLEE event-routing functions is always maintained on a per-node basis.

Per-node activation state

In the per-node activation state mode, Rhino maintains activation state for the installed services and created resource adaptor entities in a namespace on a per-node basis. That is, the SLEE records separate activation state information for each individual cluster node.

The per-node activation state mode is the default mode in a newly installed Rhino cluster.

Symmetric activation state

In the symmetric activation state mode, Rhino maintains a single cluster-wide activation state view for each installed service and created resource adaptor entity. So, for example, if a service is activated, then it is simultaneously activated on every cluster node. If a new node joins the cluster, then the services and resource adaptor entities on that node each enter the same activation state as for existing cluster nodes.

MBean operations affecting the activation state of services or resource adaptor entities that accept a list of node IDs to apply the operation to, such as the

Similarly, MBean operations that inspect or manage per-node activation state, such as the

Symmetric Activation State

This section includes instructions for performing the following Rhino SLEE procedures, with explanations, examples and links to related javadocs:

| Procedure | rhino-console command | MBean → Operation |
|---|---|---|
| Enabling the symmetric activation state mode | enablesymmetricactivationstatemode | Runtime Config Management → enableSymmetricActivationStateMode |
| Disabling the symmetric activation state mode | disablesymmetricactivationstatemode | Runtime Config Management → disableSymmetricActivationStateMode |
| Getting the current activation state mode | getsymmetricactivationstatemode | Runtime Config Management → isSymmetricActivationStateModeEnabled |

Enable Symmetric Activation State Mode

To switch from the per-node activation state mode to the symmetric activation state mode, use the following rhino-console command or related MBean operation.

Console command: enablesymmetricactivationstatemode

Command

enablesymmetricactivationstatemode <template-node-id>

Description: Enable symmetric activation state mode of services and resource adaptor entities across the cluster. Components across the cluster will assume the state of the specified template node

Example

$ ./rhino-console enablesymmetricactivationstatemode 101
Symmetric activation state mode enabled using node 101 as a template

MBean operation: enableSymmetricActivationStateMode

MBean: Runtime Config Management

Rhino operation

public void enableSymmetricActivationStateMode(int templateNodeID)
    throws InvalidStateException, InvalidArgumentException,
           ConfigurationException;

This operation activates and/or deactivates services and resource adaptor entities on other cluster nodes such that their activation state matches that of the template node. If a service or resource adaptor entity is deactivated on a node where the SLEE operational state is RUNNING or STOPPING, that service or resource adaptor entity will enter the STOPPING state and be allowed to drain before it transitions to the INACTIVE state.
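The effect of enableSymmetricActivationStateMode on the non-template nodes can be pictured as a set difference per node. The model below is hypothetical, not Rhino code. It treats each node's activation state as the set of ACTIVE component names. It then computes what each node must activate and deactivate to match the template node. It ignores the STOPPING and drain phase described above.

```java
import java.util.*;

/** Model of enabling symmetric mode from a template node (hypothetical, not Rhino code). */
final class SymmetricPlan {
    /** Components a node must activate and deactivate to match the template. */
    static final class Actions {
        final SortedSet<String> activate = new TreeSet<>();
        final SortedSet<String> deactivate = new TreeSet<>();
    }

    /**
     * @param activeByNode   node ID -> names of services/RA entities ACTIVE on that node
     * @param templateNodeId the node whose activation state the other nodes adopt
     */
    static SortedMap<Integer, Actions> plan(Map<Integer, Set<String>> activeByNode, int templateNodeId) {
        Set<String> template = activeByNode.get(templateNodeId);
        if (template == null) {
            throw new IllegalArgumentException("unknown template node " + templateNodeId);
        }
        SortedMap<Integer, Actions> plan = new TreeMap<>();
        for (Map.Entry<Integer, Set<String>> e : activeByNode.entrySet()) {
            if (e.getKey() == templateNodeId) {
                continue; // the template node keeps its current state
            }
            Actions a = new Actions();
            a.activate.addAll(template);
            a.activate.removeAll(e.getValue());   // active on the template, inactive here
            a.deactivate.addAll(e.getValue());
            a.deactivate.removeAll(template);     // active here, inactive on the template
            plan.put(e.getKey(), a);
        }
        return plan;
    }
}
```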

Disable Symmetric Activation State Mode

To switch from the symmetric activation state mode back to the per-node activation state mode, use the following rhino-console command or related MBean operation.

Console command: disablesymmetricactivationstatemode

Command

disablesymmetricactivationstatemode

Description: Disable symmetric activation state mode of services and resource adaptor entities across the cluster, such that components may have different activation states on different nodes

Example

$ ./rhino-console disablesymmetricactivationstatemode
Symmetric activation state mode disabled

MBean operation: disableSymmetricActivationStateMode

MBean: Runtime Config Management

Rhino operation

public void disableSymmetricActivationStateMode()
    throws InvalidStateException, ConfigurationException;

This operation disables the symmetric activation state mode, restoring the per-node activation state mode. The existing activation states of services and resource adaptor entities are not affected by this operation: the per-node activation state of each service and resource adaptor entity is simply set to its current symmetric state.

Get the Current Activation State Mode

To determine if the symmetric activation state mode is enabled or disabled, use the following rhino-console command or related MBean operation.

If the symmetric activation state mode is disabled, the per-node activation state mode is in force.

Console command: getsymmetricactivationstatemode

Command

getsymmetricactivationstatemode

Description: Display the current status of the symmetric activation state mode

Example

$ ./rhino-console getsymmetricactivationstatemode
Symmetric activation state mode is currently enabled

MBean operation: isSymmetricActivationStateModeEnabled

MBean: Runtime Config Management

Rhino operation

public boolean isSymmetricActivationStateModeEnabled()
    throws ConfigurationException;

Per-Node Activation State

This section includes instructions for performing the following Rhino SLEE procedures, with explanations, examples, and links to related javadocs.

| Procedure | rhino-console command | MBean → Operation |
|---|---|---|
| Listing nodes with per-node activation state | getnodeswithpernodeactivationstate | Node Housekeeping → getNodesWithPerNodeActivationState |
| Copying per-node activation state to another node | copypernodeactivationstate | Node Housekeeping → copyPerNodeActivationState |
| Removing per-node activation state | removepernodeactivationstate | Node Housekeeping → removePerNodeActivationState |

See also Finding Housekeeping MBeans.

Listing Nodes with Per-Node Activation State

To get a list of nodes with per-node activation state, use the following rhino-console command or related MBean operation.

Console command: getnodeswithpernodeactivationstate

Command

getnodeswithpernodeactivationstate

Description: Get the set of nodes for which per-node activation state exists

Example

$ ./rhino-console getnodeswithpernodeactivationstate
Nodes with per-node activation state: [101,102,103]

MBean operation: getNodesWithPerNodeActivationState

MBean: Node Housekeeping

Rhino operation

public int[] getNodesWithPerNodeActivationState()
    throws ManagementException;

This operation returns an array listing the IDs of cluster nodes that have per-node activation state recorded in the database.

Copying Per-Node Activation State to Another Node

To copy per-node activation state from one node to another, use the following rhino-console command or related MBean operation.

Console command: copypernodeactivationstate

Command

copypernodeactivationstate <from-node-id> <to-node-id>

Description: Copy per-node activation state from one node to another

Example

To copy the per-node activation state from node 101 to node 102:

$ ./rhino-console copypernodeactivationstate 101 102
Per-node activation state copied from 101 to 102

MBean operation: copyPerNodeActivationState

MBean

Rhino operation

public boolean copyPerNodeActivationState(int targetNodeID)
    throws UnsupportedOperationException, InvalidArgumentException,
           InvalidStateException, ManagementException;

This operation:

The start-rhino.sh command with the Production version of Rhino also includes an option (-c) to copy per-node activation state from another node to the booting node as it initialises. (See Start Rhino in the Getting Started Guide.)

Removing Per-Node Activation State

To remove per-node activation state, use the following rhino-console command or related MBean operation.

Console command: removepernodeactivationstate

Command

removepernodeactivationstate <from-node-id>

Description

Remove per-node activation state from a node

Example

To remove per-node activation state from node 103:

$ ./rhino-console removepernodeactivationstate 103
Per-node activation state removed from 103

MBean operation: removePerNodeActivationState

MBean

Rhino operation

public boolean removePerNodeActivationState()
    throws UnsupportedOperationException, InvalidStateException,
           ManagementException;

This operation:

The start-rhino.sh command with the Production version of Rhino also includes an option (-d) to remove per-node activation state from the booting node as it initialises. (See Start Rhino in the Getting Started Guide.)

Startup and Shutdown Priority

Startup and shutdown priorities should be set when resource adaptors and services need to be activated or deactivated in a particular order when the SLEE is started or stopped. For example, the resource adaptors responsible for writing Call Detail Records often need to be deactivated last.

Valid priorities are between -128 and 127. Startup and shutdown occur from highest to lowest priority.
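The ordering rule (highest priority first for both startup and shutdown, with values from -128 to 127) can be sketched in Java. The `PriorityOrder` class below is hypothetical and works on a plain name-to-priority map rather than calling Rhino. Breaking ties by name is an assumption made only to keep the output deterministic, because this section does not say how Rhino orders entities with equal priorities.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;

// Hypothetical model of priority ordering; not Rhino's implementation.
final class PriorityOrder {
    private PriorityOrder() {}

    // Rejects values outside -128..127 and narrows to byte, matching the byte
    // parameter of the setStartingPriority/setStoppingPriority MBean operations.
    static byte checkedPriority(int value) {
        if (value < Byte.MIN_VALUE || value > Byte.MAX_VALUE) {
            throw new IllegalArgumentException("Priority must be between -128 and 127: " + value);
        }
        return (byte) value;
    }

    // Returns entity or service names ordered from highest to lowest priority.
    // Equal priorities are ordered by name (an assumption of this sketch).
    static List<String> highestFirst(Map<String, Byte> priorities) {
        List<String> names = new ArrayList<>(priorities.keySet());
        names.sort(Comparator.<String>comparingInt(n -> priorities.get(n))
                .reversed()
                .thenComparing(Comparator.<String>naturalOrder()));
        return names;
    }
}
```

For the CDR example, giving the CDR-writing resource adaptor entity a stopping priority of -128 puts it at the end of the `highestFirst` list of stopping priorities, so it would be deactivated after everything else.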

Console commands

Console command: getraentitystartingpriority

Command

getraentitystartingpriority <entity-name>

Description

Get the starting priority for a resource adaptor entity

Examples

$ ./rhino-console getraentitystartingpriority sipra
Resource adaptor entity sipra activation priority is currently 0

Console command: getraentitystoppingpriority

Command

getraentitystoppingpriority <entity-name>

Description

Get the stopping priority for a resource adaptor entity

Examples

$ ./rhino-console getraentitystoppingpriority sipra
Resource adaptor entity sipra deactivation priority is currently 0

Console command: getservicestartingpriority

Command

getservicestartingpriority <service-id>

Description

Get the starting priority for a service

Examples

$ ./rhino-console getservicestartingpriority name=SIP\ Presence\ Service,vendor=OpenCloud,version=1.1
Service ServiceID[name=SIP Presence Service,vendor=OpenCloud,version=1.1] activation priority is currently 0

Console command: getservicestoppingpriority

Command

getservicestoppingpriority <service-id>

Description

Get the stopping priority for a service

Examples

$ ./rhino-console getservicestoppingpriority name=SIP\ Presence\ Service,vendor=OpenCloud,version=1.1
Service ServiceID[name=SIP Presence Service,vendor=OpenCloud,version=1.1] deactivation priority is currently 0

Console command: setraentitystartingpriority

Command

setraentitystartingpriority <entity-name> <priority>

Description

Set the starting priority for a resource adaptor entity. The priority must be between -128 and 127 and higher priority values have precedence over lower priority values

Examples

$ ./rhino-console setraentitystartingpriority sipra 127
Resource adaptor entity sipra activation priority set to 127
$ ./rhino-console setraentitystartingpriority sipra -128
Resource adaptor entity sipra activation priority set to -128

Console command: setraentitystoppingpriority

Command

setraentitystoppingpriority <entity-name> <priority>

Description

Set the stopping priority for a resource adaptor entity. The priority must be between -128 and 127 and higher priority values have precedence over lower priority values

Examples

$ ./rhino-console setraentitystoppingpriority sipra 127
Resource adaptor entity sipra deactivation priority set to 127
$ ./rhino-console setraentitystoppingpriority sipra -128
Resource adaptor entity sipra deactivation priority set to -128

Console command: setservicestartingpriority

Command

setservicestartingpriority <service-id> <priority>

Description

Set the starting priority for a service. The priority must be between -128 and 127 and higher priority values have precedence over lower priority values

Examples

$ ./rhino-console setservicestartingpriority name=SIP\ Presence\ Service,vendor=OpenCloud,version=1.1 127
Service ServiceID[name=SIP Presence Service,vendor=OpenCloud,version=1.1] activation priority set to 127
$ ./rhino-console setservicestartingpriority name=SIP\ Presence\ Service,vendor=OpenCloud,version=1.1 -128
Service ServiceID[name=SIP Presence Service,vendor=OpenCloud,version=1.1] activation priority set to -128

Console command: setservicestoppingpriority

Command

setservicestoppingpriority <service-id> <priority>

Description

Set the stopping priority for a service. The priority must be between -128 and 127 and higher priority values have precedence over lower priority values

Examples

$ ./rhino-console setservicestoppingpriority name=SIP\ Presence\ Service,vendor=OpenCloud,version=1.1 127
Service ServiceID[name=SIP Presence Service,vendor=OpenCloud,version=1.1] deactivation priority set to 127
$ ./rhino-console setservicestoppingpriority name=SIP\ Presence\ Service,vendor=OpenCloud,version=1.1 -128
Service ServiceID[name=SIP Presence Service,vendor=OpenCloud,version=1.1] deactivation priority set to -128

MBean operations

Services

MBean

Rhino extensions

getStartingPriority

byte getStartingPriority(ServiceID service)
    throws NullPointerException, UnrecognizedServiceException, ManagementException;

getStartingPriorities

Byte[] getStartingPriorities(ServiceID[] services)
    throws NullPointerException, ManagementException;

getStoppingPriority

byte getStoppingPriority(ServiceID service)
    throws NullPointerException, UnrecognizedServiceException, ManagementException;

getStoppingPriorities

Byte[] getStoppingPriorities(ServiceID[] services)
    throws NullPointerException, ManagementException;

setStartingPriority

void setStartingPriority(ServiceID service, byte priority)
    throws NullPointerException, UnrecognizedServiceException, ManagementException;

setStoppingPriority

void setStoppingPriority(ServiceID service, byte priority)
    throws NullPointerException, UnrecognizedServiceException, ManagementException;

Resource Adaptors

MBean

Rhino extensions

getStartingPriority

byte getStartingPriority(String entityName)
    throws NullPointerException, UnrecognizedResourceAdaptorEntityException, ManagementException;

getStartingPriorities

Byte[] getStartingPriorities(String[] entityNames)
    throws NullPointerException, ManagementException;

getStoppingPriority

byte getStoppingPriority(String entityName)
    throws NullPointerException, UnrecognizedResourceAdaptorEntityException, ManagementException;

getStoppingPriorities

Byte[] getStoppingPriorities(String[] entityNames)
    throws NullPointerException, ManagementException;

setStartingPriority

void setStartingPriority(String entityName, byte priority)
    throws NullPointerException, UnrecognizedResourceAdaptorEntityException, ManagementException;

setStoppingPriority

void setStoppingPriority(String entityName, byte priority)
    throws NullPointerException, UnrecognizedResourceAdaptorEntityException, ManagementException;

Deployable Units

As well as an overview of deployable units, this section includes instructions for performing the following Rhino SLEE procedures with explanations, examples and links to related javadocs:

| Procedure | rhino-console command(s) | MBean → Operation |
|---|---|---|
| Installing deployable units | install, installlocaldu | DeploymentMBean → install |
| Uninstalling deployable units | uninstall | DeploymentMBean → uninstall |
| Listing deployable units | listdeployableunits | DeploymentMBean → getDeployableUnits |

About Deployable Units

Below are a definition, preconditions for installing and uninstalling, and an example of a deployable unit.

What is a deployable unit?

A deployable unit is a jar file that can be installed in the SLEE. It contains:

- a deployment descriptor
- constituent jar files, with Java class files and deployment descriptors for components such as:
  - SBBs
  - events
  - profile specifications
  - resource adaptor types
  - resource adaptors
  - libraries
- XML files for services.

The JAIN SLEE 1.1 specification defines the structure of a deployable unit.

Installing and uninstalling deployable units

You must install and uninstall deployable units in a particular order, according to the dependencies of the SLEE components they contain. You cannot install a deployable unit unless either it contains all of its dependencies, or they are already installed. For example, if your deployable unit contains an SBB which depends on a library jar, the library jar must either already be installed in the SLEE, or be included in that same deployable unit jar.

Pre-conditions

A deployable unit cannot be installed if any of the following is true:

-

A deployable unit with the same URL has already been installed in the SLEE.

-

The deployable unit contains a component with the same name, vendor and version as a component of the same type that is already installed in the SLEE.

-

The deployable unit contains a component that references other components that are not yet installed in the SLEE and are not included in the deployable unit jar. (For example, an SBB component may reference event-type components and profile-specification components that are not included or pre-installed.)

A deployable unit cannot be uninstalled if either of the following is true:

-

There are any dependencies on any of its components from components in other installed deployable units. For example, if a deployable unit contains an SBB jar that depends on a profile-specification jar contained in a second deployable unit, the deployable unit containing the profile-specification jar cannot be uninstalled while the deployable unit containing the SBB jar remains installed.

-

There are "instances" of components contained in the deployable unit. For example, a deployable unit containing a resource adaptor cannot be uninstalled if the SLEE includes resource adaptor entities of that resource adaptor.
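The ordering rule above amounts to a topological sort: each deployable unit must be installed after the units it depends on, and uninstalled before them. The following is a minimal Java sketch that assumes you have already worked out a dependency map for your own deployable units. The `DeployOrder` class and its input map are hypothetical; Rhino does not expose this structure. The sketch uses Kahn's algorithm and breaks ties alphabetically only to keep the output stable.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.PriorityQueue;
import java.util.Set;

// Hypothetical planning helper; not part of Rhino.
final class DeployOrder {
    private DeployOrder() {}

    // deps maps each deployable unit to the units it depends on.
    // Returns an install order in which every unit follows all of its dependencies.
    static List<String> installOrder(Map<String, Set<String>> deps) {
        Map<String, Integer> unmet = new HashMap<>();           // unmet dependency count per unit
        Map<String, List<String>> dependents = new HashMap<>();  // reverse edges
        for (Map.Entry<String, Set<String>> e : deps.entrySet()) {
            unmet.putIfAbsent(e.getKey(), 0);
            for (String dependency : e.getValue()) {
                unmet.putIfAbsent(dependency, 0);
                unmet.merge(e.getKey(), 1, Integer::sum);
                dependents.computeIfAbsent(dependency, k -> new ArrayList<>()).add(e.getKey());
            }
        }
        // A priority queue keeps the result deterministic when several units are ready.
        PriorityQueue<String> ready = new PriorityQueue<>();
        unmet.forEach((du, count) -> {
            if (count == 0) {
                ready.add(du);
            }
        });
        List<String> order = new ArrayList<>();
        while (!ready.isEmpty()) {
            String du = ready.poll();
            order.add(du);
            for (String dependent : dependents.getOrDefault(du, List.of())) {
                if (unmet.merge(dependent, -1, Integer::sum) == 0) {
                    ready.add(dependent);
                }
            }
        }
        if (order.size() != unmet.size()) {
            throw new IllegalStateException("Dependency cycle among deployable units");
        }
        return order;
    }

    // Uninstalling is the reverse: dependents go before the units they depend on.
    static List<String> uninstallOrder(Map<String, Set<String>> deps) {
        List<String> order = new ArrayList<>(installOrder(deps));
        Collections.reverse(order);
        return order;
    }
}
```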

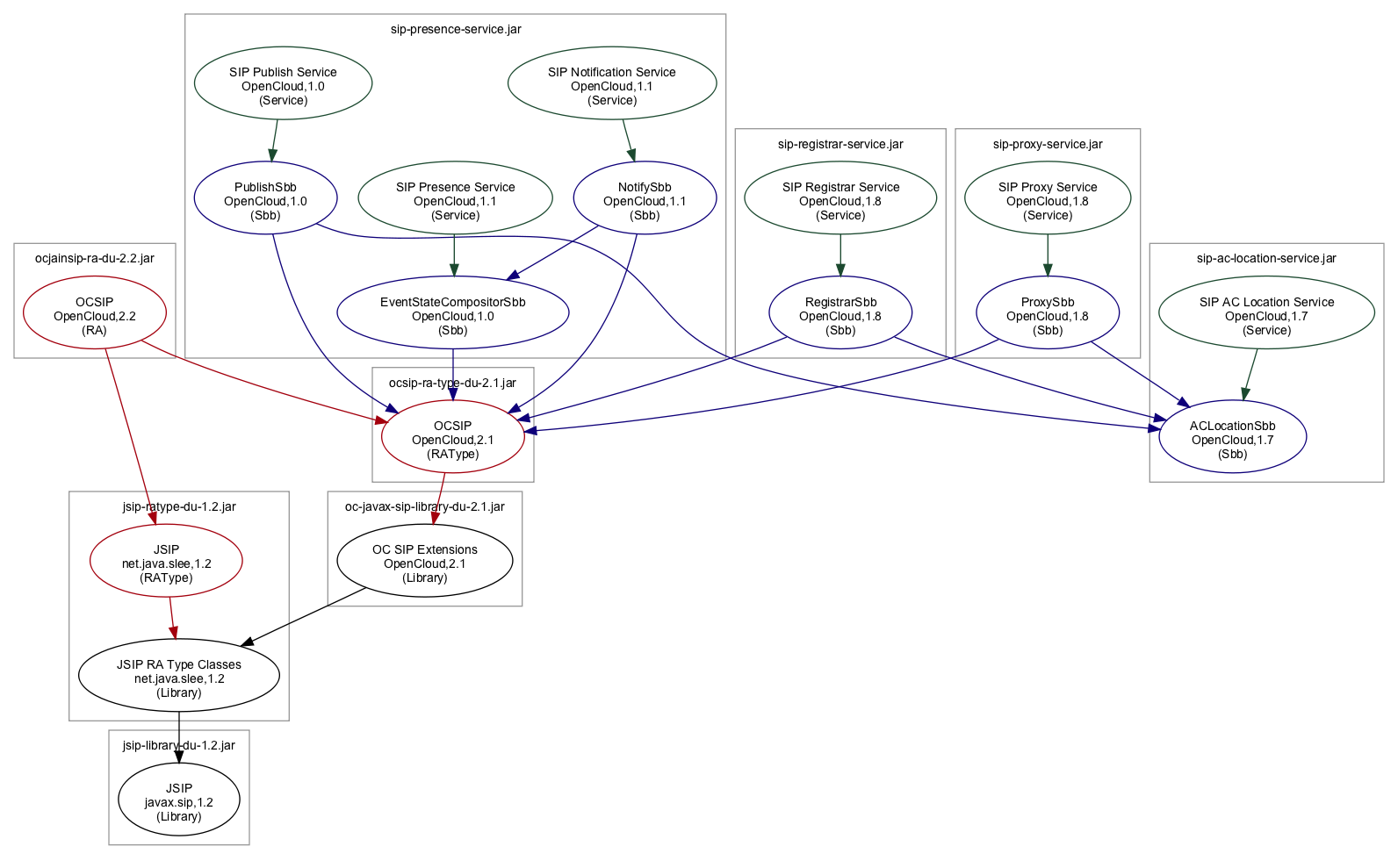

Deployable unit example

The following example illustrates the deployment descriptor for a deployable unit jar file:

<deployable-unit>
    <description> ... </description>
    ...
    <jar> SomeProfileSpec.jar </jar>
    <jar> BarAddressProfileSpec.jar </jar>
    <jar> SomeCustomEvent.jar </jar>
    <jar> FooSBB.jar </jar>
    <jar> BarSBB.jar </jar>
    ...
    <service-xml> FooService.xml </service-xml>
    ...
</deployable-unit>

The content of the deployable unit jar file is as follows:

META-INF/deployable-unit.xml
META-INF/MANIFEST.MF
...
SomeProfileSpec.jar
BarAddressProfileSpec.jar
SomeCustomEvent.jar
FooSBB.jar
BarSBB.jar
FooService.xml
...
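To check which component jars and service XML files a deployable unit will install before running `install`, you can read `META-INF/deployable-unit.xml` from the jar (for example with `java.util.jar.JarFile`) and list its `<jar>` and `<service-xml>` entries. The following is a minimal sketch of the parsing step only. `DuDescriptor` is a hypothetical name, and the sketch does not validate the descriptor against the SLEE DTD.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.NodeList;

// Hypothetical helper that lists entries in a deployable-unit.xml descriptor.
final class DuDescriptor {
    private DuDescriptor() {}

    // Returns the trimmed text of every element named tag (for example "jar" or "service-xml").
    static List<String> entries(String descriptorXml, String tag) throws Exception {
        DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
        // Real descriptors declare a DOCTYPE; skip fetching the external DTD so parsing
        // works offline (a feature supported by the JDK's built-in parser).
        factory.setFeature("http://apache.org/xml/features/nonvalidating/load-external-dtd", false);
        Document doc = factory.newDocumentBuilder()
                .parse(new ByteArrayInputStream(descriptorXml.getBytes(StandardCharsets.UTF_8)));
        NodeList nodes = doc.getElementsByTagName(tag);
        List<String> result = new ArrayList<>(nodes.getLength());
        for (int i = 0; i < nodes.getLength(); i++) {
            result.add(nodes.item(i).getTextContent().trim());
        }
        return result;
    }
}
```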

Installing Deployable Units

To install a deployable unit, use the following rhino-console command or related MBean operation.

Console commands: install, installlocaldu

Commands

Installing from a URL

install <url> [-type <type>] [-installlevel <level>]

Description

Install a deployable unit jar or other artifact. To install something other than a deployable unit, the -type option must be specified. The -installlevel option controls to what degree the deployable artifact is installed

Installing from a local file

installlocaldu <file url> [-type <type>] [-installlevel <level>] [-url url]

Description

Install a deployable unit or other artifact. This command will attempt to forward the file content (by reading the file) to rhino if the management client is on a different host. To install something other than a deployable unit, the -type option must be specified. The -installlevel option controls to what degree the deployable artifact is installed. The -url option allows the deployment unit to be installed with an alternative URL identifier

Examples

To install a deployable unit from a given URL:

$ ./rhino-console install file:/home/rhino/rhino/examples/sip-examples-2.0/lib/jsip-library-du-1.2.jar
installed: DeployableUnitID[url=file:/home/rhino/rhino/examples/sip-examples-2.0/lib/jsip-library-du-1.2.jar]

To install a deployable unit from the local file system of the management client:

$ ./rhino-console installlocaldu file:/home/rhino/rhino/examples/sip-examples-2.0/lib/jsip-library-du-1.2.jar
installed: DeployableUnitID[url=file:/home/rhino/rhino/examples/sip-examples-2.0/lib/jsip-library-du-1.2.jar]

MBean operation: install

MBean

SLEE-defined

Install a deployable unit from a given URL

public DeployableUnitID install(String url)
    throws NullPointerException, MalformedURLException,
           AlreadyDeployedException, DeploymentException,
           ManagementException;

Installs the given deployable unit jar file into the SLEE. The given URL must be resolvable from the Rhino node.

Rhino extension

Install a deployable unit from a given byte array

public DeployableUnitID install(String url, byte[] content)
    throws NullPointerException, MalformedURLException,
           AlreadyDeployedException, DeploymentException,
           ManagementException;

Installs the given deployable unit jar file into the SLEE. The caller passes the contents of the deployable unit jar file as a byte array, and the SLEE installs the deployable unit as if it had been read from the given URL.
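`installlocaldu` reads the file on the management client and forwards its content to Rhino. A Java JMX client can do the same with the byte-array `install` extension. The following is a minimal sketch under these assumptions: you already hold a connected `MBeanServerConnection` (for example from `JMXConnectorFactory.connect`, although Rhino's JMX address and credentials are not covered here), and the DeploymentMBean is registered under the JAIN SLEE 1.1 name used below. Verify that name against your installation. `LocalDuInstaller` and `Payload` are hypothetical names.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import javax.management.MBeanServerConnection;
import javax.management.ObjectName;

// Hypothetical client-side helper; not part of Rhino.
final class LocalDuInstaller {
    private LocalDuInstaller() {}

    // Assumed ObjectName of the SLEE-defined DeploymentMBean (JAIN SLEE 1.1 naming).
    static final String DEPLOYMENT_MBEAN = "javax.slee.management:name=Deployment";

    // URL identifier plus raw jar bytes: the two arguments of install(String, byte[]).
    static final class Payload {
        final String url;
        final byte[] content;

        Payload(String url, byte[] content) {
            this.url = url;
            this.content = content;
        }
    }

    // Reads a jar from the client's local file system. The deployable unit is later
    // identified by this URL string, so uninstall must use exactly the same string.
    static Payload readLocalDu(Path jar) throws IOException {
        return new Payload(jar.toUri().toString(), Files.readAllBytes(jar));
    }

    // Calls install(String url, byte[] content) over JMX. The result is a
    // DeployableUnitID, so the JAIN SLEE API classes must be on the client classpath.
    static Object install(MBeanServerConnection conn, Payload payload) throws Exception {
        return conn.invoke(
                new ObjectName(DEPLOYMENT_MBEAN),
                "install",
                new Object[] {payload.url, payload.content},
                new String[] {String.class.getName(), byte[].class.getName()});
    }
}
```

Note that `Path.toUri()` produces `file:///...` URLs, which differ textually from the `file:/...` form used in the console examples. Because the URL string identifies the deployable unit, pick one form and use it consistently.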

Uninstalling Deployable Units

To uninstall a deployable unit, use the following rhino-console command or related MBean operation.

A deployable unit cannot be uninstalled if it contains any components that any other deployable unit installed in the SLEE depends on.

Console command: uninstall

Command

uninstall <url>

Description

Uninstall a deployable unit jar

Examples

To uninstall a deployable unit that was installed with the given URL:

$ ./rhino-console uninstall file:/home/rhino/rhino/examples/sip-examples-2.0/lib/jsip-library-du-1.2.jar
uninstalled: DeployableUnitID[url=file:/home/rhino/rhino/examples/sip-examples-2.0/lib/jsip-library-du-1.2.jar]

Console command: cascadeuninstall

Command |

cascadeuninstall <type> <url|component-id> [-force] [-s]

Description

Cascade uninstall a deployable unit or copied component. The optional -force

argument prevents the command from prompting for confirmation before the

uninstall occurs. The -s argument removes the shadow from a shadowed component

and is not valid for deployable units

|

|---|---|

Examples |

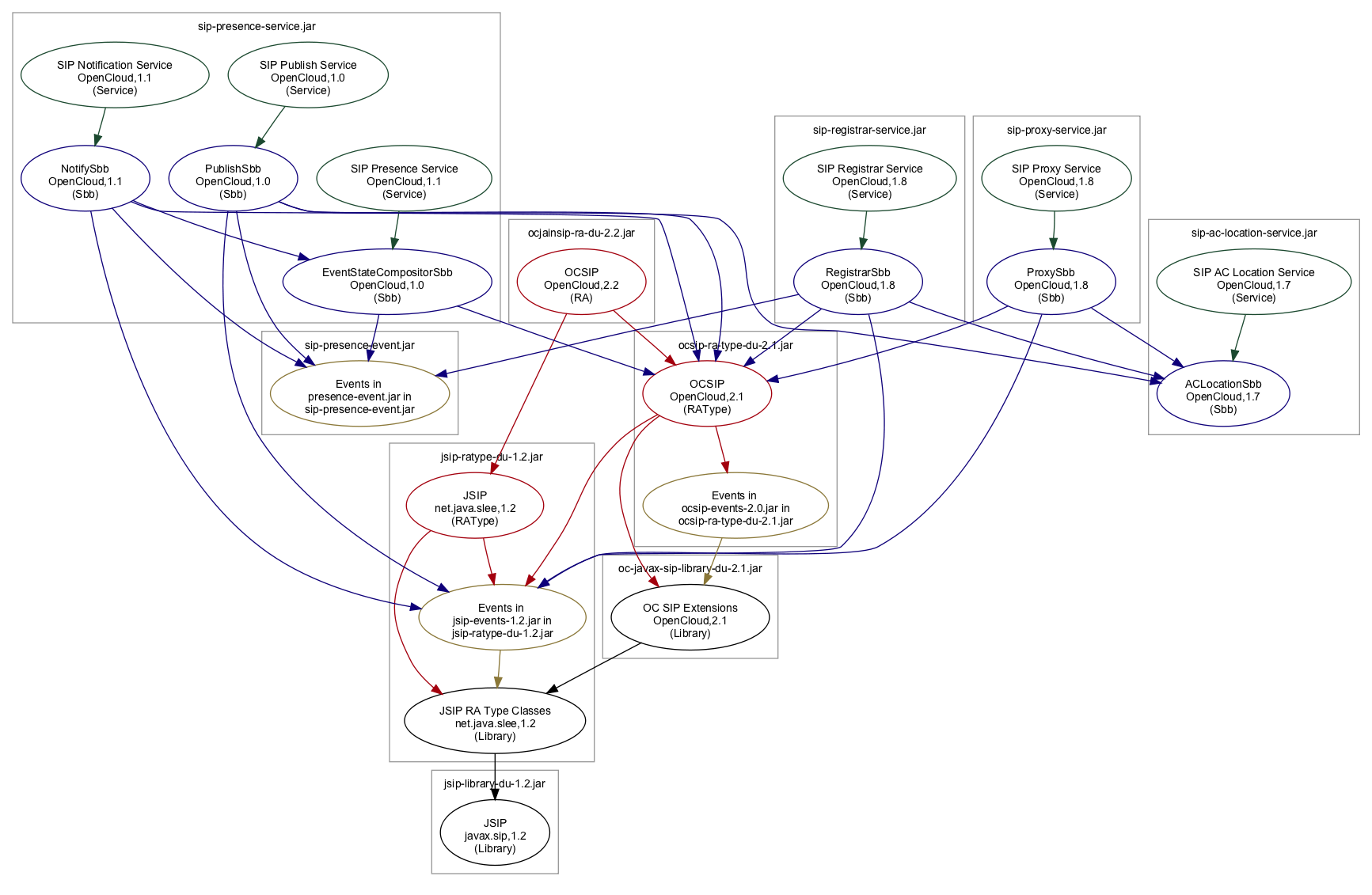

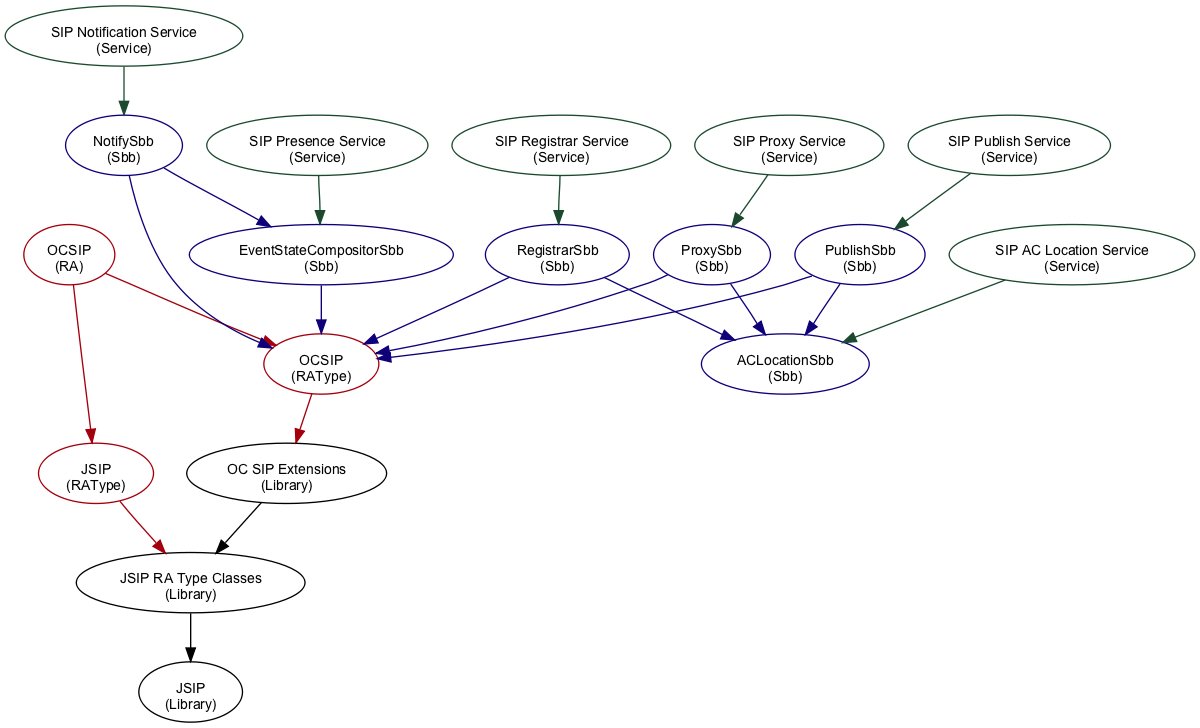

To uninstall a deployable unit which was installed with the given URL and all deployable units that depend on this: $ ./rhino-console cascadeuninstall du file:du/ocsip-ra-2.3.1.17.du.jar

Cascade removal of deployable unit file:du/ocsip-ra-2.3.1.17.du.jar requires the following operations to be performed:

Deployable unit file:jars/sip-registrar-service.jar will be uninstalled

SBB with SbbID[name=RegistrarSbb,vendor=OpenCloud,version=1.8] will be uninstalled

Service with ServiceID[name=SIP Registrar Service,vendor=OpenCloud,version=1.8] will be uninstalled

This service will first be deactivated

Deployable unit file:jars/sip-presence-service.jar will be uninstalled

SBB with SbbID[name=EventStateCompositorSbb,vendor=OpenCloud,version=1.0] will be uninstalled

SBB with SbbID[name=NotifySbb,vendor=OpenCloud,version=1.1] will be uninstalled

SBB with SbbID[name=PublishSbb,vendor=OpenCloud,version=1.0] will be uninstalled

Service with ServiceID[name=SIP Notification Service,vendor=OpenCloud,version=1.1] will be uninstalled

This service will first be deactivated

Service with ServiceID[name=SIP Presence Service,vendor=OpenCloud,version=1.1] will be uninstalled

This service will first be deactivated

Service with ServiceID[name=SIP Publish Service,vendor=OpenCloud,version=1.0] will be uninstalled

This service will first be deactivated

Deployable unit file:jars/sip-proxy-service.jar will be uninstalled

SBB with SbbID[name=ProxySbb,vendor=OpenCloud,version=1.8] will be uninstalled

Service with ServiceID[name=SIP Proxy Service,vendor=OpenCloud,version=1.8] will be uninstalled

This service will first be deactivated

Deployable unit file:du/ocsip-ra-2.3.1.17.du.jar will be uninstalled

Resource adaptor with ResourceAdaptorID[name=OCSIP,vendor=OpenCloud,version=2.3.1] will be uninstalled

Resource adaptor entity sipra will be removed

This resource adaptor entity will first be deactivated

Link name OCSIP bound to this resource adaptor entity will be removed

Continue? (y/n): y

Deactivating service ServiceID[name=SIP Registrar Service,vendor=OpenCloud,version=1.8]

Deactivating service ServiceID[name=SIP Notification Service,vendor=OpenCloud,version=1.1]

Deactivating service ServiceID[name=SIP Presence Service,vendor=OpenCloud,version=1.1]

Deactivating service ServiceID[name=SIP Publish Service,vendor=OpenCloud,version=1.0]

Deactivating service ServiceID[name=SIP Proxy Service,vendor=OpenCloud,version=1.8]

All necessary services are inactive

Deactivating resource adaptor entity sipra

All necessary resource adaptor entities are inactive

Uninstalling deployable unit file:jars/sip-registrar-service.jar

Uninstalling deployable unit file:jars/sip-presence-service.jar

Uninstalling deployable unit file:jars/sip-proxy-service.jar

Unbinding resource adaptor entity link name OCSIP

Removing resource adaptor entity sipra

Uninstalling deployable unit file:du/ocsip-ra-2.3.1.17.du.jar
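`cascadeuninstall` works out the dependent deployable units for you. The following Java sketch models only the deployable-unit ordering visible in the output above: every unit that depends (directly or transitively) on the target is removed before the unit it depends on. It assumes a hypothetical dependency map. It does not model the service deactivation, resource adaptor entity removal or link-name unbinding that the real command also performs, and the relative order of independent dependents may differ from Rhino's. `CascadeUninstall` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

// Hypothetical planning helper; not part of Rhino.
final class CascadeUninstall {
    private CascadeUninstall() {}

    // deps maps each deployable unit to the units it depends on. Returns the target plus
    // every unit that depends on it, ordered so that each unit comes after everything
    // that depends on it. The target is always last.
    static List<String> plan(String target, Map<String, Set<String>> deps) {
        List<String> order = new ArrayList<>();
        visit(target, new TreeMap<>(deps), new HashSet<>(), order);
        return order;
    }

    private static void visit(String du, Map<String, Set<String>> deps,
                              Set<String> seen, List<String> order) {
        if (!seen.add(du)) {
            return; // already scheduled
        }
        // Schedule every dependent of du before du itself (post-order walk over reverse edges).
        for (Map.Entry<String, Set<String>> e : deps.entrySet()) {
            if (e.getValue().contains(du)) {
                visit(e.getKey(), deps, seen, order);
            }
        }
        order.add(du);
    }
}
```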

MBean operation: uninstall

MBean

SLEE-defined

public void uninstall(DeployableUnitID id)
    throws NullPointerException, UnrecognizedDeployableUnitException,
           DependencyException, InvalidStateException, ManagementException;

Uninstalls the given deployable unit jar file (along with all the components it contains) from the SLEE.

Listing Deployable Units

To list the installed deployable units, use the following rhino-console command or related MBean operation.

Console command: listdeployableunits

Command

listdeployableunits

Description

List the current installed deployable units

Example

To list the currently installed deployable units:

$ ./rhino-console listdeployableunits
DeployableUnitID[url=file:/home/rhino/rhino/examples/sip-examples-2.0/lib/jsip-library-du-1.2.jar]
DeployableUnitID[url=file:/home/rhino/rhino/lib/javax-slee-standard-types.jar]

MBean operation: getDeployableUnits

MBean

SLEE-defined

public DeployableUnitID[] getDeployableUnits()
    throws ManagementException;

Returns the set of deployable unit identifiers that identify all the deployable units installed in the SLEE.

Services

As well as an overview of SLEE services, this section includes instructions for performing the following Rhino SLEE procedures with explanations, examples and links to related javadocs:

| Procedure | rhino-console command(s) | MBean → Operation(s) |
|---|---|---|
| All available services | listservices | Deployment → getServices |
| Services by state | listservicesbystate | Service Management → getServices |
| Activating services | activateservice | Service Management → activate |
| Deactivating services | deactivateservice | Service Management → deactivate |
| Upgrading (activating and deactivating) services | deactivateandactivateservice | Service Management → deactivateAndActivate |
| Getting link bindings required by a service | listserviceralinks | Deployment → getServices |
| | listsbbs | Deployment → getSbbs |
| | | Deployment → getDescriptors |
| | getservicemetricsrecordingenabled | ServiceManagementMBean → getServiceMetricsRecordingEnabled |
| | setservicemetricsrecordingenabled | ServiceManagementMBean → setServiceMetricsRecordingEnabled |

About Services

The SLEE specification defines the operational lifecycle of a SLEE service, which is summarised below.

What are SLEE services?

Services are SLEE components that provide the application logic to act on input from resource adaptors.

Service lifecycle states

| State | Definition |
|---|---|
| INACTIVE | The service has been installed successfully and is ready to be activated, but is not yet running. The SLEE will not create SBB entities of the service’s root SBB to process events. |
| ACTIVE | The service is running. The SLEE will create SBB entities of the service’s root SBB to process initial events. The SLEE will also deliver events to SBB entities of the service’s SBBs, as appropriate. |
| STOPPING | The service is deactivating. Existing SBB entities of the service continue running and may complete their processing, but the SLEE will not create new SBB entities of the service’s root SBB for new activities. |

Once the SLEE has reclaimed all of a service’s SBB entities, the service transitions out of the STOPPING state and returns to the INACTIVE state.

Independent operational states

As explained in About SLEE Operational States, each event-router node in a Rhino cluster maintains its own lifecycle state machine, independent of other nodes in the cluster. This is also true for each service: one service might be INACTIVE on one node in a cluster, ACTIVE on another, and STOPPING on a third. The operational state of a service on each cluster node is also persisted to the disk-based database.

After node bootup and initialisation completes, a service enters the INACTIVE state if the database’s persistent operational state information for that service is missing, or is set to INACTIVE or STOPPING.

As with node operational states, you can change the operational state of a service at any time, as long as at least one node in the cluster is available to perform the management operation (regardless of whether the node whose operational state is being changed is a current cluster member). For example, you might activate a service on node 103 before node 103 is booted; then, when node 103 boots and completes initialisation, that service will transition to the ACTIVE state.
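The lifecycle rules in this section can be summarised as a small state machine, with one state per service per node. The following Java sketch is hypothetical and is not Rhino's implementation. Its `State` enum is a local model, distinct from `javax.slee.management.ServiceState`. The activate and deactivate preconditions come from the node-specific MBean extensions described later in this section. The boot rule for a persisted ACTIVE state is inferred from the node 103 example.

```java
// Hypothetical per-node model of the service lifecycle; not Rhino's implementation.
final class ServiceLifecycle {
    private ServiceLifecycle() {}

    // Local model of the three service states.
    enum State { INACTIVE, ACTIVE, STOPPING }

    static State activate(State current) {
        require(current == State.INACTIVE, "activate", current);
        return State.ACTIVE;
    }

    static State deactivate(State current) {
        require(current == State.ACTIVE, "deactivate", current);
        return State.STOPPING;
    }

    // Called once the SLEE has reclaimed all of the service's SBB entities.
    static State entitiesReclaimed(State current) {
        require(current == State.STOPPING, "finish stopping", current);
        return State.INACTIVE;
    }

    // Only an ACTIVE service gets new root SBB entities for initial events.
    static boolean createsRootSbbEntities(State current) {
        return current == State.ACTIVE;
    }

    // State after node boot: a persisted ACTIVE state is restored; a missing (null),
    // INACTIVE or STOPPING state results in INACTIVE.
    static State stateAfterBoot(State persisted) {
        return persisted == State.ACTIVE ? State.ACTIVE : State.INACTIVE;
    }

    private static void require(boolean ok, String op, State current) {
        if (!ok) {
            throw new IllegalStateException("Cannot " + op + " a service in state " + current);
        }
    }
}
```

To reflect the per-node independence described above, a management script could keep one such state per node, for example in a `Map<Integer, ServiceLifecycle.State>` keyed by node ID.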

Configuring services

An administrator can configure a service before deployment by modifying its service-jar.xml deployment descriptor (in its deployable unit). This includes specifying:

-

the address profile table to use when a subscribed address selects initial events for the service’s root SBB

-

the default event-router priority for the SLEE to give to root SBB entities of the service when processing initial events.

Individual SBBs used in a service can also have configurable properties or environment entries. Values for these environment entries are defined in the sbb-jar.xml deployment descriptor included in the SBB’s component jar. Administrators can set or adjust the values for each environment entry before the SBB is installed in the SLEE.

The SLEE only reads the configurable properties defined in a service or SBB deployment descriptor at deployment time. If you need to change the value of any of these properties, you’ll need to:

-

uninstall the related component (service or SBB whose properties you want to configure) from the SLEE

-

change the properties

-

reinstall the component

-

uninstall and reinstall other components (as needed) to satisfy dependency requirements enforced by the SLEE.

All Available Services

To list all available services installed in the SLEE, use the following rhino-console command or related MBean operation.

Console command: listservices

Command

listservices

Description

List the current installed services

Example

$ ./rhino-console listservices
ServiceID[name=SIP AC Location Service,vendor=OpenCloud,version=1.7]
ServiceID[name=SIP Proxy Service,vendor=OpenCloud,version=1.8]
ServiceID[name=SIP Registrar Service,vendor=OpenCloud,version=1.8]

MBean operation: getServices

MBean

SLEE-defined

public ServiceID[] getServices()
    throws ManagementException;

This operation returns an array of service component identifiers, identifying the services installed in the SLEE.

See also Services by State.

Services by State

To list the services that are in a specified activation state, use the following rhino-console command or related MBean operation.

Console command: listservicesbystate

Command

listservicesbystate <state> [-node node]

Description

List the services that are in the specified state (on the specified node)

Output

The activation state of a service is node-specific. If the

Example

To list the services in the ACTIVE state on node 102:

$ ./rhino-console listservicesbystate Active -node 102
Services in Active state on node 102:
ServiceID[name=SIP Proxy Service,vendor=OpenCloud,version=1.8]
ServiceID[name=SIP Registrar Service,vendor=OpenCloud,version=1.8]

MBean operation: getServices

MBean

SLEE-defined

Get services on all nodes

public ServiceID[] getServices(ServiceState state)
    throws NullPointerException, ManagementException;

Rhino’s implementation of the SLEE-defined

Rhino extension

Get services on specific nodes

public ServiceID[] getServices(ServiceState state, int nodeID)
    throws NullPointerException, InvalidArgumentException,
           ManagementException;

Rhino provides an extension that adds a node ID argument, letting you specify the node on which to list the services in a particular state.

See also All Available Services.

Activating Services

To activate one or more services, use the following rhino-console command or related MBean operation.

Console command: activateservice

Command

activateservice <service-id>* [-nodes node1,node2,...] [-ifneeded]

Description

Activate a service (on the specified nodes)

Example

To activate the Call Barring and Call Forwarding services on nodes 101 and 102:

$ ./rhino-console activateservice \
    "name=Call Barring Service,vendor=OpenCloud,version=0.2" \
    "name=Call Forwarding Service,vendor=OpenCloud,version=0.2" \
    -nodes 101,102
Activating services [ServiceID[name=Call Barring Service,vendor=OpenCloud,version=0.2], ServiceID[name=Call Forwarding Service,vendor=OpenCloud,version=0.2]] on node(s) [101,102]
Services transitioned to the Active state on node 101
Services transitioned to the Active state on node 102
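Scripts that generate these commands, for example to build an upgrade command from a list of installed services, often need to split a `<service-id>` argument back into its name, vendor and version. The following is a minimal Java sketch. It assumes that no field value contains a comma and that the shell has already removed quotes or backslash escapes (as in `name=SIP\ Presence\ Service,...`). `ServiceIdArg` is a hypothetical helper, not a Rhino API.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical parser for rhino-console service-id arguments.
final class ServiceIdArg {
    private ServiceIdArg() {}

    // Splits "name=Call Barring Service,vendor=OpenCloud,version=0.2" into its fields.
    // Only the first '=' in each component separates key from value.
    static Map<String, String> parse(String arg) {
        Map<String, String> fields = new LinkedHashMap<>();
        for (String part : arg.split(",")) {
            int eq = part.indexOf('=');
            if (eq <= 0) {
                throw new IllegalArgumentException("Malformed component '" + part + "' in: " + arg);
            }
            fields.put(part.substring(0, eq).trim(), part.substring(eq + 1).trim());
        }
        // A service ID needs all three parts.
        for (String key : new String[] {"name", "vendor", "version"}) {
            if (!fields.containsKey(key)) {
                throw new IllegalArgumentException("Missing " + key + " in: " + arg);
            }
        }
        return fields;
    }
}
```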

MBean operation: activate

MBean

SLEE-defined

Activate on all nodes

public void activate(ServiceID id)
    throws NullPointerException, UnrecognizedServiceException,
           InvalidStateException, InvalidLinkNameBindingStateException,
           ManagementException;

public void activate(ServiceID[] ids)
    throws NullPointerException, InvalidArgumentException,
           UnrecognizedServiceException, InvalidStateException,
           InvalidLinkNameBindingStateException, ManagementException;

Rhino’s implementation of the SLEE-defined

Rhino extension

Activate on specific nodes

public void activate(ServiceID id, int[] nodeIDs)
    throws NullPointerException, InvalidArgumentException,
           UnrecognizedServiceException, InvalidStateException,
           ManagementException;

public void activate(ServiceID[] ids, int[] nodeIDs)
    throws NullPointerException, InvalidArgumentException,
           UnrecognizedServiceException, InvalidStateException,
           ManagementException;

Rhino provides an extension that adds an argument to let you control the nodes on which to activate the specified services (by specifying node IDs). For this to work, the specified services must be in the INACTIVE state on the specified nodes.

A service may require resource adaptor entity link names to be bound to appropriate resource adaptor entities before it can be activated. (See Getting Link Bindings Required by a Service and Managing Resource Adaptor Entity Link Bindings.)

Deactivating Services

To deactivate one or more services on one or more nodes, use the following rhino-console command or related MBean operation.

Console command: deactivateservice

Command

deactivateservice <service-id>* [-nodes node1,node2,...] [-ifneeded]

Description

Deactivate a service (on the specified nodes)

Example

To deactivate the Call Barring and Call Forwarding services on nodes 101 and 102:

$ ./rhino-console deactivateservice \
    "name=Call Barring Service,vendor=OpenCloud,version=0.2" \
    "name=Call Forwarding Service,vendor=OpenCloud,version=0.2" \
    -nodes 101,102
Deactivating services [ServiceID[name=Call Barring Service,vendor=OpenCloud,version=0.2],
ServiceID[name=Call Forwarding Service,vendor=OpenCloud,version=0.2]] on node(s) [101,102]
Services transitioned to the Stopping state on node 101
Services transitioned to the Stopping state on node 102

MBean operation: deactivate

MBean

SLEE-defined

Deactivate on all nodes

public void deactivate(ServiceID id)
    throws NullPointerException, UnrecognizedServiceException,
           InvalidStateException, ManagementException;

public void deactivate(ServiceID[] ids)
    throws NullPointerException, InvalidArgumentException,
           UnrecognizedServiceException, InvalidStateException,
           ManagementException;

Rhino’s implementation of the SLEE-defined

Rhino extension

Deactivate on specific nodes

public void deactivate(ServiceID id, int[] nodeIDs)
    throws NullPointerException, InvalidArgumentException,
           UnrecognizedServiceException, InvalidStateException,
           ManagementException;

public void deactivate(ServiceID[] ids, int[] nodeIDs)
    throws NullPointerException, InvalidArgumentException,
           UnrecognizedServiceException, InvalidStateException,
           ManagementException;

Rhino provides an extension that adds an argument that lets you control the nodes on which to deactivate the specified services (by specifying node IDs). For this to work, the specified services must be in the ACTIVE state on the specified nodes.

Console command: waittilserviceisinactive

Command

waittilserviceisinactive <service-id> [-timeout timeout] [-nodes node1,node2,...]

Description

Wait for a service to finish deactivating (on the specified nodes) (timing out after N seconds)

Example

To wait for the Call Barring and Call Forwarding services to finish deactivating on nodes 101 and 102:

$ ./rhino-console waittilserviceisinactive \
    "name=Call Barring Service,vendor=OpenCloud,version=0.2" \
    "name=Call Forwarding Service,vendor=OpenCloud,version=0.2" \
    -nodes 101,102
Service ServiceID[name=Call Barring Service,vendor=OpenCloud,version=0.2] is in the Inactive state on node(s) [101,102]
Service ServiceID[name=Call Forwarding Service,vendor=OpenCloud,version=0.2] is in the Inactive state on node(s) [101,102]
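`waittilserviceisinactive` blocks until the service has left the STOPPING state or the timeout expires. A JMX client that talks to the MBeans directly has no such command, so it would have to poll instead. For example, it could repeatedly check whether the service appears in `getServices(ServiceState.INACTIVE, nodeID)`. The following generic poll-with-timeout helper is a hypothetical sketch. The condition you pass in, and how it queries Rhino, is left to the caller.

```java
import java.time.Duration;
import java.util.function.BooleanSupplier;

// Hypothetical polling helper; not part of Rhino.
final class WaitUntil {
    private WaitUntil() {}

    // Re-evaluates condition every interval until it is true or timeout has elapsed.
    // Returns true if the condition was met, false if the timeout expired first.
    static boolean poll(BooleanSupplier condition, Duration timeout, Duration interval)
            throws InterruptedException {
        long deadline = System.nanoTime() + timeout.toNanos();
        while (true) {
            if (condition.getAsBoolean()) {
                return true;
            }
            if (System.nanoTime() - deadline >= 0) {
                return false;
            }
            Thread.sleep(interval.toMillis());
        }
    }
}
```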

Upgrading (Activating & Deactivating) Services

To activate some services and deactivate others, use the following rhino-console command or related MBean operation.

Activating and deactivating in one operation

The SLEE specification defines the ability to deactivate some services and activate other services in a single operation. As one set of services deactivates, the existing activities being processed by those services continue to completion, while new activities (started after the operation is invoked) are processed by the activated services. This is intended for upgrading one or more services to new versions, although the operation does not have to be used strictly for that purpose.
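The following Java sketch models the effect of a combined deactivate-and-activate on one node's service states. It assumes the same per-node preconditions stated for the separate operations in this section: services being deactivated must be ACTIVE, and services being activated must be INACTIVE. Applying them to the combined operation is an assumption. The all-or-nothing check (nothing changes if any precondition fails) is a design choice of this sketch, not documented Rhino behaviour. `UpgradeCheck` is hypothetical.

```java
import java.util.Collection;
import java.util.Map;

// Hypothetical model of deactivateAndActivate on a single node; not Rhino's implementation.
final class UpgradeCheck {
    private UpgradeCheck() {}

    enum State { INACTIVE, ACTIVE, STOPPING }

    // node maps service IDs to their state on this node. All preconditions are checked
    // before anything changes, so a rejected request leaves the map as it was.
    static void deactivateAndActivate(Map<String, State> node,
                                      Collection<String> toDeactivate,
                                      Collection<String> toActivate) {
        for (String id : toDeactivate) {
            if (node.get(id) != State.ACTIVE) {
                throw new IllegalStateException(id + " is not ACTIVE");
            }
        }
        for (String id : toActivate) {
            if (node.get(id) != State.INACTIVE) {
                throw new IllegalStateException(id + " is not INACTIVE");
            }
        }
        // Old versions drain their existing activities while STOPPING;
        // new activities go to the newly ACTIVE versions.
        toDeactivate.forEach(id -> node.put(id, State.STOPPING));
        toActivate.forEach(id -> node.put(id, State.ACTIVE));
    }
}
```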

Console command: deactivateandactivateservice

Command

deactivateandactivateservice Deactivate <service-id>* Activate <service-id>* [-nodes node1,node2,...]

Description

Deactivate some services and Activate some other services (on the specified nodes)

Example

To deactivate version 0.2 of the Call Barring and Call Forwarding services and activate version 0.3 of the same services on nodes 101 and 102:

$ ./rhino-console deactivateandactivateservice \

Deactivate "name=Call Barring Service,vendor=OpenCloud,version=0.2" \

"name=Call Forwarding Service,vendor=OpenCloud,version=0.2" \

Activate "name=Call Barring Service,vendor=OpenCloud,version=0.3" \

"name=Call Forwarding Service,vendor=OpenCloud,version=0.3" \

-nodes 101,102

On node(s) [101,102]:

Deactivating service(s) [ServiceID[name=Call Barring Service,vendor=OpenCloud,version=0.2],

ServiceID[name=Call Forwarding Service,vendor=OpenCloud,version=0.2]]

Activating service(s) [ServiceID[name=Call Barring Service,vendor=OpenCloud,version=0.3],

ServiceID[name=Call Forwarding Service,vendor=OpenCloud,version=0.3]]

Deactivating service(s) transitioned to the Stopping state on node 101

Activating service(s) transitioned to the Active state on node 101

Deactivating service(s) transitioned to the Stopping state on node 102

Activating service(s) transitioned to the Active state on node 102

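When scripting an upgrade, the argument list for `deactivateandactivateservice` can be generated rather than typed by hand. The following Java sketch assembles the arguments in the order of the command syntax above (the `Deactivate` IDs, then the `Activate` IDs, then an optional `-nodes` list). The `./rhino-console` path and the `UpgradeCommand` helper are assumptions for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.IntStream;

final class UpgradeCommand {

    /** Formats a service identifier in the name=...,vendor=...,version=... form used in the console examples. */
    static String serviceId(String name, String vendor, String version) {
        return "name=" + name + ",vendor=" + vendor + ",version=" + version;
    }

    /**
     * Builds the argument list for deactivateandactivateservice: the Deactivate IDs, then the
     * Activate IDs, then -nodes with a comma-separated list (only if node IDs are given).
     */
    static List<String> build(List<String> deactivate, List<String> activate, int... nodeIds) {
        List<String> args = new ArrayList<>();
        args.add("./rhino-console"); // assumed path to the console script
        args.add("deactivateandactivateservice");
        args.add("Deactivate");
        args.addAll(deactivate);
        args.add("Activate");
        args.addAll(activate);
        if (nodeIds.length > 0) {
            args.add("-nodes");
            args.add(IntStream.of(nodeIds)
                    .mapToObj(n -> Integer.toString(n))
                    .collect(Collectors.joining(",")));
        }
        return args;
    }

    public static void main(String[] args) {
        List<String> command = build(
                List.of(serviceId("Call Barring Service", "OpenCloud", "0.2"),
                        serviceId("Call Forwarding Service", "OpenCloud", "0.2")),
                List.of(serviceId("Call Barring Service", "OpenCloud", "0.3"),
                        serviceId("Call Forwarding Service", "OpenCloud", "0.3")),
                101, 102);
        System.out.println(command);
        // ProcessBuilder passes each element as a separate argument, so no shell quoting is needed:
        // int exitCode = new ProcessBuilder(command).inheritIO().start().waitFor();
    }
}
```

Keeping each service ID as a single list element avoids the shell quoting needed in the console example, since the IDs contain spaces.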

MBean operation: deactivateAndActivate

SLEE-defined

Deactivate and activate on all nodes

public void deactivateAndActivate(ServiceID deactivateID, ServiceID activateID)
    throws NullPointerException, InvalidArgumentException,
    UnrecognizedServiceException, InvalidStateException,
    InvalidLinkNameBindingStateException, ManagementException;

public void deactivateAndActivate(ServiceID[] deactivateIDs, ServiceID[] activateIDs)
    throws NullPointerException, InvalidArgumentException,
    UnrecognizedServiceException, InvalidStateException,
    InvalidLinkNameBindingStateException, ManagementException;

These are Rhino’s implementations of the SLEE-defined operations; they deactivate and activate the services on all nodes.

Rhino extension

Deactivate and activate on specific nodes

public void deactivateAndActivate(ServiceID deactivateID, ServiceID activateID, int[] nodeIDs)
    throws NullPointerException, InvalidArgumentException,
    UnrecognizedServiceException, InvalidStateException,
    ManagementException;

public void deactivateAndActivate(ServiceID[] deactivateIDs, ServiceID[] activateIDs, int[] nodeIDs)
    throws NullPointerException, InvalidArgumentException,
    UnrecognizedServiceException, InvalidStateException,
    ManagementException;

Rhino provides an extension that adds an argument for specifying the IDs of the nodes on which to deactivate and activate the services. On those nodes, the services to deactivate must be in the ACTIVE state and the services to activate must be in the INACTIVE state.
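The node-specific precondition can be checked before issuing the operation. The following Java sketch is illustrative only: the nested `ServiceState` enum mirrors the SLEE service states, and the per-node state map is a hypothetical stand-in for state gathered from Rhino. It reports every service that is not in the required state on a target node, instead of stopping at the first problem.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

final class UpgradePreconditions {

    enum ServiceState { INACTIVE, ACTIVE, STOPPING }

    /**
     * Returns a list of violations: each service to deactivate must be ACTIVE, and each service
     * to activate must be INACTIVE, on every node in nodeIds.
     * states maps node ID -> (service ID -> state); a missing entry counts as a violation.
     */
    static List<String> check(Map<Integer, Map<String, ServiceState>> states,
                              List<String> deactivate, List<String> activate, int[] nodeIds) {
        List<String> violations = new ArrayList<>();
        for (int node : nodeIds) {
            Map<String, ServiceState> onNode = states.getOrDefault(node, Map.of());
            for (String id : deactivate) {
                requireState(violations, node, id, onNode.get(id), ServiceState.ACTIVE);
            }
            for (String id : activate) {
                requireState(violations, node, id, onNode.get(id), ServiceState.INACTIVE);
            }
        }
        return violations;
    }

    private static void requireState(List<String> violations, int node, String id,
                                     ServiceState actual, ServiceState required) {
        if (actual != required) {
            String found = actual == null ? "not found" : actual.name();
            violations.add(id + " is " + found + " on node " + node + " (expected " + required + ")");
        }
    }

    public static void main(String[] args) {
        // Hypothetical states: on node 102 the new version is already ACTIVE.
        Map<Integer, Map<String, ServiceState>> states = Map.of(
                101, Map.of("v0.2", ServiceState.ACTIVE, "v0.3", ServiceState.INACTIVE),
                102, Map.of("v0.2", ServiceState.ACTIVE, "v0.3", ServiceState.ACTIVE));
        check(states, List.of("v0.2"), List.of("v0.3"), new int[] {101, 102})
                .forEach(System.out::println);
    }
}
```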

Getting Link Bindings Required by a Service

To find the resource adaptor entity link name bindings needed for a service, and list the service’s SBBs, use the following rhino-console commands or related MBean operations.

Console commands

listserviceralinks

Command

listserviceralinks service-id

Description

List resource adaptor entity links required by a service

Example

To list the resource adaptor entity links that the JCC VPN service needs:

$ ./rhino-console listserviceralinks "name=JCC 1.1 VPN,vendor=Open Cloud,version=1.0"

In service ServiceID[name=JCC 1.1 VPN,vendor=Open Cloud,version=1.0]:

SBB SbbID[name=AnytimeInterrogation sbb,vendor=Open Cloud,version=1.0] requires entity link bindings: slee/resources/map

SBB SbbID[name=JCC 1.1 VPN sbb,vendor=Open Cloud,version=1.0] requires entity link bindings: slee/resources/cdr

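Because each SBB can require different link names, the bindings a service needs are the union of its SBBs' requirements. The following Java sketch computes that union and reports which names are missing from a set of bound link names (for example, as listed by `listralinknames`). The input maps and the `RaLinkCheck` helper are hypothetical; in practice they would be filled from output such as the example above.

```java
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

final class RaLinkCheck {

    /** Union of the link names required by all SBBs in a service (sorted for stable output). */
    static Set<String> requiredLinks(Map<String, List<String>> linksBySbb) {
        Set<String> required = new TreeSet<>();
        for (List<String> links : linksBySbb.values()) {
            required.addAll(links);
        }
        return required;
    }

    /** Required link names that are not in the given set of bound link names. */
    static Set<String> unboundLinks(Map<String, List<String>> linksBySbb, Set<String> boundLinkNames) {
        Set<String> missing = requiredLinks(linksBySbb);
        missing.removeAll(boundLinkNames);
        return missing;
    }

    public static void main(String[] args) {
        // SBB and link names from the JCC 1.1 VPN example above.
        Map<String, List<String>> linksBySbb = Map.of(
                "AnytimeInterrogation sbb", List.of("slee/resources/map"),
                "JCC 1.1 VPN sbb", List.of("slee/resources/cdr"));
        // Hypothetical: only slee/resources/cdr has been bound so far.
        System.out.println(unboundLinks(linksBySbb, Set.of("slee/resources/cdr")));
    }
}
```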

listsbbs

Command

listsbbs [service-id]

Description

List the currently installed SBBs. If a service identifier is specified, only the SBBs in the given service are listed.

Example

To list the SBBs in the JCC VPN service:

$ ./rhino-console listsbbs "name=JCC 1.1 VPN,vendor=Open Cloud,version=1.0"

SbbID[name=AnytimeInterrogation sbb,vendor=Open Cloud,version=1.0]

SbbID[name=JCC 1.1 VPN sbb,vendor=Open Cloud,version=1.0]

SbbID[name=Proxy route sbb,vendor=Open Cloud,version=1.0]

MBean operations: getServices, getSbbs, and getDescriptors

SLEE-defined

Get all services in the SLEE

public ServiceID[] getServices()
    throws ManagementException;

Get all SBBs in a service

public SbbID[] getSbbs(ServiceID service)
    throws NullPointerException, UnrecognizedServiceException,
    ManagementException;

Get the component descriptors for a set of components

public ComponentDescriptor[] getDescriptors(ComponentID[] ids)
    throws NullPointerException, ManagementException;

Configuring service metrics recording status

To check and configure the status for recording service metrics, use the following rhino-console commands or related MBean operations.

Details of the recorded metrics statistics are listed in Metrics.Services.cmp and Metrics.Services.lifecycle.

Metrics recording is disabled by default, for performance reasons.

Console commands

getservicemetricsrecordingenabled

Command

getservicemetricsrecordingenabled <service-id>

Description

Determine if metrics recording for a service has been enabled

Example

To check whether metrics recording is enabled for service1:

$ ./rhino-console getservicemetricsrecordingenabled name=service1,vendor=OpenCloud,version=1.0

Metrics recording for ServiceID[name=service1,vendor=OpenCloud,version=1.0] is currently disabled

setservicemetricsrecordingenabled

Command

setservicemetricsrecordingenabled <service-id> <true|false>

Description

Enable or disable the recording of metrics for a service

Example

To enable metrics recording for service1:

$ ./rhino-console setservicemetricsrecordingenabled name=service1,vendor=OpenCloud,version=1.0 true

Metrics recording for ServiceID[name=service1,vendor=OpenCloud,version=1.0] has been enabled

MBean operations: getServiceMetricsRecordingEnabled and setServiceMetricsRecordingEnabled

Rhino extension

Determine if the recording of metrics for a service is currently enabled or disabled.

public boolean getServiceMetricsRecordingEnabled(ServiceID service)
    throws NullPointerException, UnrecognizedServiceException, ManagementException;

Enable or disable the recording of metrics for a service.

public void setServiceMetricsRecordingEnabled(ServiceID service, boolean enabled)
    throws NullPointerException, UnrecognizedServiceException, ManagementException;

Resource Adaptor Entities

As well as an overview of resource adaptor entities, this section includes instructions for performing the following Rhino SLEE procedures with explanations, examples and links to related javadocs:

| rhino-console command | MBean → Operation |
|---|---|
| listraconfigproperties | Resource Management → getConfigurationProperties |
| createraentity | Resource Management → createResourceAdaptorEntity |
| removeraentity | Resource Management → removeResourceAdaptorEntity |
| listraentityconfigproperties | Resource Management → getConfigurationProperties |
| updateraentityconfigproperties | Resource Management → updateConfigurationProperties |
| activateraentity | Resource Management → activateResourceAdaptorEntity |
| deactivateraentity | Resource Management → deactivateResourceAdaptorEntity |
| reassignactivities | Resource Management → reassignActivities |
| getraentitystate | Resource Management → getState |
| listraentitiesbystate | Resource Management → getResourceAdaptorEntities |
| bindralinkname | Resource Management → bindLinkName |
| unbindralinkname | Resource Management → unbindLinkName |
| listralinknames | Resource Management → getLinkNames |

About Resource Adaptor Entities

Resource adaptors (RAs) are SLEE components which let particular network protocols or APIs be used in the SLEE.

They typically include a set of configurable properties (such as address information of network endpoints, URLs to external systems, or internal timer-timeout values). These properties may include default values. A resource adaptor entity is a particular configured instance of a resource adaptor, with defined values for all of that RA’s configuration properties.
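The relationship between an RA's configuration properties, their defaults, and an entity's configuration can be sketched as a merge: supplied values override defaults, and the entity is fully configured only once every property has a value. This Java sketch is a simplified model with hypothetical property names (`remoteHost`, `timeoutMillis`); it does not use the SLEE or Rhino configuration-property APIs.

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class RaEntityConfig {

    /**
     * Merges an RA's declared properties (name -> default value; null means "no default")
     * with the values supplied for a new entity. Supplied values override defaults.
     * Throws if any declared property would be left without a value.
     */
    static Map<String, String> resolve(Map<String, String> declaredDefaults, Map<String, String> supplied) {
        Map<String, String> resolved = new LinkedHashMap<>();
        for (Map.Entry<String, String> property : declaredDefaults.entrySet()) {
            String name = property.getKey();
            String value = supplied.containsKey(name) ? supplied.get(name) : property.getValue();
            if (value == null) {
                throw new IllegalArgumentException("No value for property " + name);
            }
            resolved.put(name, value);
        }
        return resolved;
    }

    public static void main(String[] args) {
        // Hypothetical RA properties: one without a default, one with a default.
        Map<String, String> defaults = new LinkedHashMap<>();
        defaults.put("remoteHost", null);
        defaults.put("timeoutMillis", "5000");
        System.out.println(resolve(defaults, Map.of("remoteHost", "10.0.0.1")));
    }
}
```

A `LinkedHashMap` is used for the declared properties because `Map.of` rejects null values, which this model uses to mean "no default".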

The resource adaptor entity lifecycle

The SLEE specification defines the operational lifecycle of a resource adaptor entity, as summarised below.

Resource adaptor entity lifecycle states

The SLEE lifecycle states are:

| State | Definition |
|---|---|
| INACTIVE | The resource adaptor entity has been configured and initialised. It is ready to be activated, but may not yet create activities or fire events to the SLEE. Typically, it is not connected to network resources. |
| ACTIVE | The resource adaptor entity is connected to the resources it needs to function (assuming they are available), and may create activities and fire events to the SLEE. |